Compared to logs, metrics take up little storage, but ingesting and querying them still requires appropriate resources. Node sizing depends on:

- Number of metrics

- Scraping / push interval

- Retention period

With such information at hand, you can refer to the performance envelope for sizing guidelines.

How to measure a workload’s metrics rate

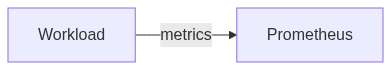

Deploy your solution and relate the workload under test to Prometheus.

Relate to Prometheus only the workload(s) whose rate you are measuring; otherwise, the counts below also include metrics from unrelated workloads.

A 48-hour view of the following expressions usually gives a good idea of the total number of metrics:

- Total number of metrics:

  ```
  count({__name__=~".+"})
  ```

  As a sanity check, compare this to the number of metrics your workload exposes. The Prometheus count may be slightly higher, because Prometheus adds a few series of its own for each scrape target (such as `up`):

  ```shell
  curl -s example.internal/metrics | grep -vE '^(#|$)' | wc -l
  ```

- The actual disk usage of metrics depends on the number of labels, the scrape interval, and the compaction configuration. To see the average rate, in bytes per second, at which chunks were written during compaction over the last 48 hours, run the following query on the Prometheus instance:

  ```
  rate(prometheus_tsdb_compaction_chunk_size_bytes_sum[48h])
  ```
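The sanity-check pipeline above can be wrapped in a small shell function. This is a minimal sketch: the function name `count_exposed_samples` is hypothetical, and it assumes the workload serves the Prometheus text exposition format, where every line that is neither a comment (`# HELP`, `# TYPE`) nor blank is one sample.

```shell
#!/usr/bin/env bash

# Hypothetical helper: count the samples in a Prometheus text-format payload
# read from stdin. Comment lines and blank (or whitespace-only) lines are
# skipped; every remaining line is one sample, i.e. one series per scrape.
count_exposed_samples() {
  grep -cvE '^[[:space:]]*(#|$)'
}

# Usage against a live endpoint (host is the placeholder used above):
#   curl -s example.internal/metrics | count_exposed_samples
```

Skipping blank lines keeps them from inflating the count, which a plain `grep -v '^# '` would not do.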

Ideally, your entire solution uses the same scrape interval (default: 1 min). The metrics rate is then the total number of metrics divided by that interval.
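Assuming a single scrape interval, the rate arithmetic, and a rough disk estimate built on top of it, can be sketched in shell. The function names are hypothetical. The disk formula (retention in seconds × samples per second × bytes per sample) follows the Prometheus storage documentation, which cites an average of 1-2 bytes per sample; the bytes-per-sample value is therefore an assumption you pass in. Treat the result as a ballpark figure and use the performance envelope for actual sizing.

```shell
#!/usr/bin/env bash

# Hypothetical helper: samples ingested per second, given the number of
# series and a single scrape interval in seconds.
# Uses bash integer arithmetic, so the result is rounded down.
metrics_rate() {
  local series=$1 interval_s=$2
  echo $(( series / interval_s ))
}

# Hypothetical helper: rough disk estimate in bytes, following the formula
#   retention_seconds * samples_per_second * bytes_per_sample
# bytes_per_sample is an assumption (Prometheus docs cite ~1-2 bytes on average).
disk_estimate_bytes() {
  local samples_per_s=$1 retention_days=$2 bytes_per_sample=$3
  echo $(( retention_days * 86400 * samples_per_s * bytes_per_sample ))
}

# Usage:
#   rate=$(metrics_rate 120000 60)        # 120k series, 1 min interval
#   disk_estimate_bytes "$rate" 15 2      # 15 days retention, 2 bytes/sample
```

For example, 120,000 series scraped every 60 seconds give 2,000 samples per second; with 15 days of retention at 2 bytes per sample, that is 5,184,000,000 bytes, or about 5.2 GB.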