Hello All,

I think I am hitting the error described in this thread, but I am not sure. The bridges I defined for ovn-chassis never get created. When I tail the ovn-chassis log, I see the following error:

unit-ovn-chassis-0: 20:36:15 INFO unit.ovn-chassis/0.juju-log ovsdb:74: Invoking reactive handler: reactive/ovn_chassis_charm_handlers.py:83:configure_ovs

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "relation-get"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "relation-get"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "relation-list"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "relation-get"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "relation-get"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "relation-get"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "relation-get"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "relation-get"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "relation-ids"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "relation-get"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "juju-log"

unit-ovn-chassis-0: 20:36:15 INFO unit.ovn-chassis/0.juju-log ovsdb:74: CompletedProcess(args=('ovs-vsctl', '--no-wait', 'set-ssl', '/etc/ovn/key_host', '/etc/ovn/cert_host', '/etc/ovn/ovn-chassis.crt'), returncode=0, stdout='')

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "network-get"

unit-ovn-chassis-0: 20:36:15 DEBUG jujuc running hook tool "juju-log"

unit-ovn-chassis-0: 20:36:15 ERROR unit.ovn-chassis/0.juju-log ovsdb:74: Hook error:

Traceback (most recent call last):

File "/var/lib/juju/agents/unit-ovn-chassis-0/.venv/lib/python3.8/site-packages/charms/reactive/__init__.py", line 74, in main

bus.dispatch(restricted=restricted_mode)

File "/var/lib/juju/agents/unit-ovn-chassis-0/.venv/lib/python3.8/site-packages/charms/reactive/bus.py", line 390, in dispatch

_invoke(other_handlers)

File "/var/lib/juju/agents/unit-ovn-chassis-0/.venv/lib/python3.8/site-packages/charms/reactive/bus.py", line 359, in _invoke

handler.invoke()

File "/var/lib/juju/agents/unit-ovn-chassis-0/.venv/lib/python3.8/site-packages/charms/reactive/bus.py", line 181, in invoke

self._action(*args)

File "/var/lib/juju/agents/unit-ovn-chassis-0/charm/reactive/ovn_chassis_charm_handlers.py", line 93, in configure_ovs

charm_instance.configure_ovs(','.join(ovsdb.db_sb_connection_strs))

File "lib/charms/ovn_charm.py", line 467, in configure_ovs

.format(self.get_data_ip()), '--',

File "lib/charms/ovn_charm.py", line 366, in get_data_ip

ch_core.hookenv.network_get(

File "/var/lib/juju/agents/unit-ovn-chassis-0/.venv/lib/python3.8/site-packages/charmhelpers/core/hookenv.py", line 1390, in network_get

response = subprocess.check_output(

File "/usr/lib/python3.8/subprocess.py", line 411, in check_output

return run(*popenargs, stdout=PIPE, timeout=timeout, check=True,

File "/usr/lib/python3.8/subprocess.py", line 512, in run

raise CalledProcessError(retcode, process.args,

subprocess.CalledProcessError: Command '['network-get', 'data', '--format', 'yaml']' returned non-zero exit status 1.

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed Traceback (most recent call last):

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "/var/lib/juju/agents/unit-ovn-chassis-0/charm/hooks/ovsdb-relation-changed", line 22, in <module>

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed main()

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "/var/lib/juju/agents/unit-ovn-chassis-0/.venv/lib/python3.8/site-packages/charms/reactive/__init__.py", line 74, in main

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed bus.dispatch(restricted=restricted_mode)

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "/var/lib/juju/agents/unit-ovn-chassis-0/.venv/lib/python3.8/site-packages/charms/reactive/bus.py", line 390, in dispatch

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed _invoke(other_handlers)

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "/var/lib/juju/agents/unit-ovn-chassis-0/.venv/lib/python3.8/site-packages/charms/reactive/bus.py", line 359, in _invoke

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed handler.invoke()

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "/var/lib/juju/agents/unit-ovn-chassis-0/.venv/lib/python3.8/site-packages/charms/reactive/bus.py", line 181, in invoke

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed self._action(*args)

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "/var/lib/juju/agents/unit-ovn-chassis-0/charm/reactive/ovn_chassis_charm_handlers.py", line 93, in configure_ovs

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed charm_instance.configure_ovs(','.join(ovsdb.db_sb_connection_strs))

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "lib/charms/ovn_charm.py", line 467, in configure_ovs

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed .format(self.get_data_ip()), '--',

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "lib/charms/ovn_charm.py", line 366, in get_data_ip

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed ch_core.hookenv.network_get(

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "/var/lib/juju/agents/unit-ovn-chassis-0/.venv/lib/python3.8/site-packages/charmhelpers/core/hookenv.py", line 1390, in network_get

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed response = subprocess.check_output(

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "/usr/lib/python3.8/subprocess.py", line 411, in check_output

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed return run(*popenargs, stdout=PIPE, timeout=timeout, check=True,

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed File "/usr/lib/python3.8/subprocess.py", line 512, in run

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed raise CalledProcessError(retcode, process.args,

unit-ovn-chassis-0: 20:36:15 DEBUG unit.ovn-chassis/0.ovsdb-relation-changed subprocess.CalledProcessError: Command '['network-get', 'data', '--format', 'yaml']' returned non-zero exit status 1.

unit-ovn-chassis-0: 20:36:16 ERROR juju.worker.uniter.operation hook "ovsdb-relation-changed" (via explicit, bespoke hook script) failed: exit status 1

unit-ovn-chassis-0: 20:36:16 DEBUG juju.machinelock machine lock released for uniter (run relation-changed (74; unit: ovn-central/2) hook)

unit-ovn-chassis-0: 20:36:16 DEBUG juju.worker.uniter.operation lock released

unit-ovn-chassis-0: 20:36:16 INFO juju.worker.uniter awaiting error resolution for "relation-changed" hook

unit-ovn-chassis-0: 20:36:16 DEBUG juju.worker.uniter [AGENT-STATUS] error: hook failed: "ovsdb-relation-changed"
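
The traceback above only reports that `network-get data --format yaml` exited with status 1; it does not show what the tool printed, because `subprocess.check_output` does not capture stderr by default. Below is a minimal sketch of how the same command could be re-run with stderr captured. It assumes it is executed in a hook context on the unit, where the `network-get` hook tool is available; the helper names `run_with_stderr` and `describe` are hypothetical and not part of the charm or charmhelpers.

```python
import subprocess


def run_with_stderr(cmd):
    """Run cmd and return (returncode, stdout, stderr) without raising.

    Unlike subprocess.check_output, this keeps the stderr text, which is
    where a failing tool usually explains itself.
    """
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, check=False)
    except FileNotFoundError as exc:
        # The command is not on PATH (e.g. not running in a hook context).
        return 127, "", str(exc)
    return result.returncode, result.stdout, result.stderr


def describe(cmd):
    """Format the outcome of cmd as a short, human-readable report."""
    code, out, err = run_with_stderr(cmd)
    return "exit status: {}\nstdout: {}\nstderr: {}".format(
        code, out.strip(), err.strip()
    )


# Example, only meaningful in a hook context on the unit:
# print(describe(["network-get", "data", "--format", "yaml"]))
```

The stderr text should say why the `data` binding could not be resolved, which the log above leaves out.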

The relevant charm configs in my bundle are as follows:

    variables:
      # Network Spaces
      # This is Management network, unrelated to OpenStack and other applications
      # OAM - Operations, Administration and Maintenance
      maas: &maas maas-mgmt
      # This is OpenStack Admin network; for adminURL endpoints
      admin: &admin os-mgmt
      # This is OpenStack Public network; for publicURL endpoints
      public: &public os-ext-api
      # This is OpenStack Internal network; for internalURL endpoints
      internal: &internal os-int-api
      # This is Shared Database network; for mysql-routers
      shared-db: &shared-db os-mgmt
      # This is the overlay network(s)
      compute: &compute os-compute
      compute-ext: &compute-ext os-cloudpublic
    applications:
      ovn-central:
        charm: 'cs:ovn-central'
        num_units: 3
        series: focal
        to:
        - 'lxd:0'
        - 'lxd:1'
        - 'lxd:2'
        bindings:
          "": *admin
          ovsdb: *shared-db
          ovsdb-cms: *shared-db
          ovsdb-peer: *shared-db
      ovn-chassis:
        charm: 'cs:ovn-chassis'
        options:
          bridge-interface-mappings: 'br-cloudpublic:eno2 br-compute:eno2.4001'
          ovn-bridge-mappings: 'os-cloudpublic:br-cloudpublic os-compute:br-compute'
        series: focal
        bindings:
          "": *admin
          ovsdb: *shared-db
          data: *compute
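
As a sanity check on the two ovn-chassis option strings, here is a minimal sketch that verifies every bridge named in `ovn-bridge-mappings` also has an entry in `bridge-interface-mappings`. It assumes both options are space-delimited lists of colon-separated pairs (`bridge:interface` and `physnet:bridge` respectively), which is what the values in my bundle suggest. The function names are hypothetical and not part of the charm.

```python
def parse_pairs(value):
    """Split a space-delimited 'key:value' string into a dict.

    Only the first colon separates key from value, so a value that itself
    contains colons (such as a MAC address) is kept intact.
    """
    pairs = {}
    for item in value.split():
        key, _, val = item.partition(":")
        pairs[key] = val
    return pairs


def unmapped_bridges(bridge_interface_mappings, ovn_bridge_mappings):
    """Return bridges used in ovn-bridge-mappings that have no interface."""
    bridges_with_interface = set(parse_pairs(bridge_interface_mappings))
    bridges_wanted = set(parse_pairs(ovn_bridge_mappings).values())
    return sorted(bridges_wanted - bridges_with_interface)


# Values copied from the bundle above.
bridge_interface_mappings = "br-cloudpublic:eno2 br-compute:eno2.4001"
ovn_bridge_mappings = "os-cloudpublic:br-cloudpublic os-compute:br-compute"

print(unmapped_bridges(bridge_interface_mappings, ovn_bridge_mappings))  # prints []
```

An empty list means the two options at least agree on bridge names, so the missing bridges are more likely a consequence of the hook failing before it reaches bridge creation than of a typo in these options.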

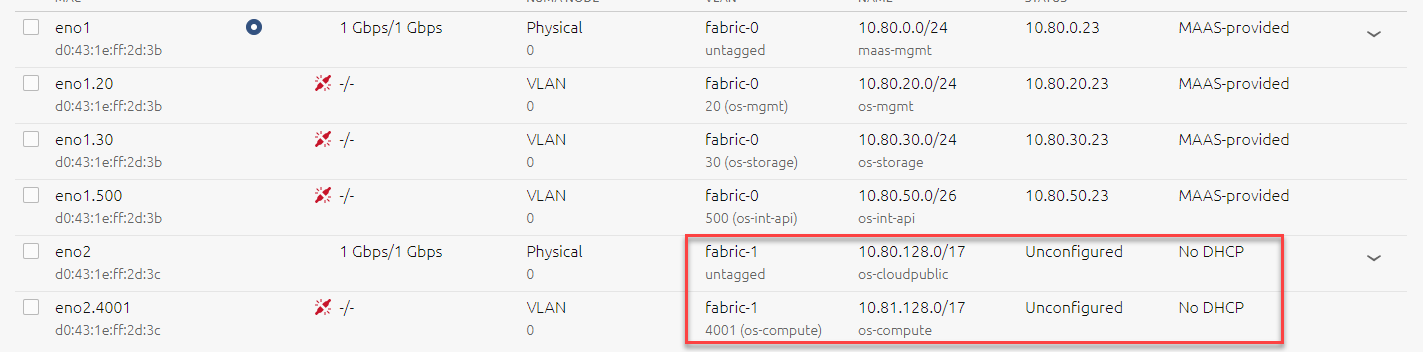

In MAAS, I configured each compute node’s interface as such:

I’m at a loss. Any ideas?