See also: How to upgrade your Juju deployment from 2.9 to 3.x

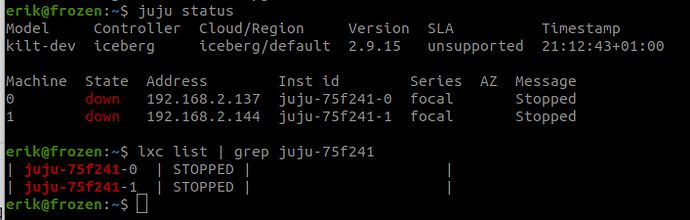

Juju 3 is out, and people are wondering how to upgrade existing 2.9 models to 3.x. The answer is model migration. Upgrading in place is possible in some, but not all, circumstances and can be more prone to losing access to multiple models if something goes wrong.

The general approach for model migration is simple on the surface:

- bootstrap a 3.x controller

- ensure the 2.9 controller and all hosted models are at version 2.9.37 or higher

- migrate models across from old 2.9.x controller to new 3.x controller

- upgrade the migrated models to 3.x
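The version requirement in the second step can be checked mechanically. The following is a minimal sketch, not part of Juju itself: it assumes you have already read each version string (for example from the Version column that `juju status` shows for each model, including the controller model) and that GNU `sort -V` is available. The function names `version_ge` and `check_version` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: compare Juju version strings against the 2.9.37 minimum
# needed before migrating models to a 3.x controller.
# Assumes `sort -V` (GNU coreutils) for version-aware ordering.

MIN_VERSION="2.9.37"

# version_ge A B: exit status 0 if version A >= version B.
version_ge() {
  # If B sorts first (or A and B are equal), then A >= B.
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_version V: report whether V meets MIN_VERSION; returns 1 if not.
check_version() {
  if version_ge "$1" "$MIN_VERSION"; then
    echo "ok: $1"
  else
    echo "too old: $1 (upgrade to $MIN_VERSION or later within 2.9 first)"
    return 1
  fi
}
```

For example, `check_version 2.9.42` prints `ok: 2.9.42`, while `check_version 2.9.30` reports the version as too old and returns a non-zero status. Version-aware sorting matters here because a plain string comparison would rank 2.9.100 below 2.9.37.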

Note: to upgrade the migrated models, you’ll need the Juju 3.0.2 client (currently in 3.0/candidate).

See also Juju | Upgrading things.

However, there’s a bit more to it - the 2.9 controller will have:

- controller configuration

- clouds

- cloud config

- users

- user credentials

- user permissions (access grants to the controller itself, models, clouds)

that need to come across to the new 3.x controller. This is where the juju-restore utility comes in handy: its `--copy-controller` option can be used to “clone” the core data from an existing controller into a new, empty controller.

Note: the new 3.x controller retains its own CA certificate and other set-once config; only config that the end user can modify is copied across.

To get started, you’ll need the juju-restore utility found here.

Then:

- Create a backup of the 2.9 controller using `juju create-backup`

- Bootstrap a new 3.x controller and do not add any models to it (it must be empty)

- `juju scp` the backup tarball and the juju-restore utility to the 3.x controller

- `juju ssh` into the 3.x controller

- Run `juju-restore --copy-controller <backup-tarball>`
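The copy steps above could be scripted roughly as follows. This is a sketch under several assumptions: the controller names, the backup path and the location of the juju-restore binary are placeholders you pass in; bootstrapping the empty 3.x controller is left out because it is cloud-specific; the 3.x controller runs on machine 0 of its `controller` model; and the files are copied to the default user's home directory there. Setting `DRY_RUN=1` prints the commands instead of running them. The helper names (`run`, `backup_old_controller`, `copy_into_new_controller`) are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of the juju-restore --copy-controller procedure.
# All names passed in (controllers, files) are placeholders.

# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Step 1: back up the 2.9 controller by targeting its controller model.
backup_old_controller() {
  local old_ctrl="$1"
  run juju create-backup -m "${old_ctrl}:controller"
}

# Steps 3-5 (run after bootstrapping an empty 3.x controller):
# copy the backup and juju-restore to machine 0, then clone the core data.
copy_into_new_controller() {
  local new_ctrl="$1" backup="$2" restore_bin="$3"
  run juju scp -m "${new_ctrl}:controller" "$backup" 0:
  run juju scp -m "${new_ctrl}:controller" "$restore_bin" 0:
  # The files land in the home directory, so refer to them by basename.
  run juju ssh -m "${new_ctrl}:controller" 0 \
    "./$(basename "$restore_bin") --copy-controller $(basename "$backup")"
}
```

With `DRY_RUN=1`, `copy_into_new_controller new-3x ./juju-backup.tar.gz ./juju-restore` prints the three commands for review before anything touches the new controller. `juju create-backup` picks its own file name for the downloaded tarball, so pass the file it actually downloads.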

See also Juju | How to manage a controller.

The admin user who maintains the 2.9 and 3.x controllers has access to both. They can now start migrating models using `juju migrate <modelname> <3.x-controllername>`.
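When there are many models, the admin could loop over them. A minimal sketch, assuming the 2.9 controller is the current controller (selected with `juju switch`) so that bare model names resolve against it; the model and controller names are placeholders, and `migrate_models` is a hypothetical helper. `juju migrate` starts each migration, and you may prefer to confirm each one has finished before starting the next. As with any dry-run helper, `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: migrate several models from the current (2.9) controller
# to a 3.x controller. Controller and model names are placeholders.

# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# migrate_models TARGET MODEL...: start one migration per model.
migrate_models() {
  local target="$1"
  shift
  local model
  for model in "$@"; do
    # Stop at the first model whose migration could not be started.
    run juju migrate "$model" "$target" || return 1
  done
}
```

For example, `migrate_models new-3x app-a app-b` runs `juju migrate app-a new-3x` and then `juju migrate app-b new-3x`.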

Existing (ordinary) users who created the models, or who were granted access to them, will be prompted to transition to the new controller the next time they run `juju status` or any other command against the model.

Note: for the transition of users to the new 3.x controller to be seamless (interactively managed by Juju), the old 2.9 controller needs to be kept up and running so that the users’ Juju clients can connect to it and be redirected to the migrated model(s) on the new 3.x controller.

Once a 2.9 model has been migrated to the new 3.x controller, the model itself can be upgraded to the same version as the controller. For example, if the controller is running Juju 3.0.2, the following will upgrade the model:

`juju upgrade-model -m <modelname> --agent-version=3.0.2`
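Once several models have been migrated, the same upgrade can be applied to each. A minimal sketch, assuming the models already live on the 3.x controller and that the controller runs the version you pass in (3.0.2 in the example above); each model is selected with the standard `-m` flag, the model names are placeholders, and `upgrade_models` is a hypothetical helper. `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: upgrade migrated models to the 3.x controller's agent version.
# Model names and the version string are placeholders.

# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# upgrade_models VERSION MODEL...: request an agent upgrade for each model.
upgrade_models() {
  local version="$1"
  shift
  local model
  for model in "$@"; do
    # Stop at the first model whose upgrade request fails.
    run juju upgrade-model -m "$model" --agent-version="$version" || return 1
  done
}
```

For example, `upgrade_models 3.0.2 new-3x:app-a new-3x:app-b` targets two models on a controller hypothetically named `new-3x`.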

Note: we expect the next Juju 3.x point release to choose the controller version automatically, without the need for the `--agent-version` argument.

Note: we expect Juju 3.1 to upgrade the migrated model to match the controller automatically.

The main remaining caveat is that ordinary users cannot easily get access to the new 3.x controller until the admin user migrates at least one model across. This is because the ordinary user’s Juju client does not know the new controller’s connection details until a migration redirection happens. A possible way around this, not yet implemented, would be for the `juju add-user` command to regenerate the registration string for an existing user; that user could then run `juju register` to set up access to the new controller and migrate their own models across. We can look into implementing this as well.

If you try this and notice that anything in your scenario has been overlooked when copying the core 2.9 controller details to the new 3.x controller, let us know and we’ll look into it.