The following are some results I took after measuring running times for charmcraft pack using Charmcraft 1.4.0 in different situations (numbers).

The first runs are for a super simple (but complete) charm: just a bunch of small files with one Python dependency:

$ tree

.

├── charmcraft.yaml

├── metadata.yaml

├── requirements.txt

├── secrets.txt

├── src

│ └── charm.py

├── to_be_included.bin

└── version

1 directory, 7 files

$ cat charmcraft.yaml

type: charm

bases:

- name: ubuntu

channel: "20.04"

parts:

charm:

prime:

- to_be_included.*

$ cat requirements.txt

ops

$

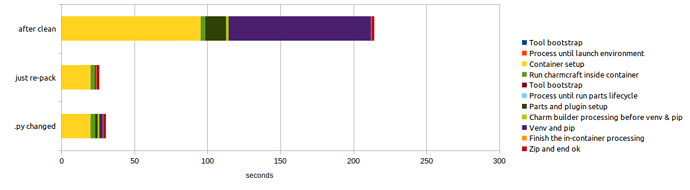

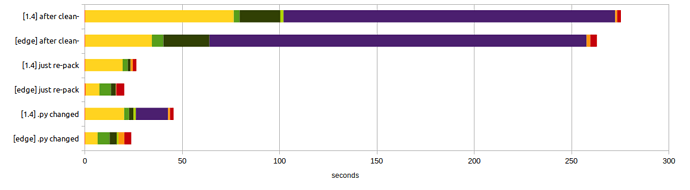

The following graph shows the times obtained from running pack after the clean command, then just running pack again, and finally running it again after modifying the charm.py file (which triggers a re-build).

Some analysis:

-

The container setup takes a lot of time. 44% of the total time for the run “after clean”, and 76% and 65% for the subsequent runs. We already been analyzing logs and we will improve these times (there are different parts to improve in the “first time” and “subsequent runs” scenarios).

-

The preparation for the parts lifecycle takes a noticeable time when the system is clean (mostly installing needed system packages and dependencies), but after that it’s not an issue.

-

The virtual environment creation and dependencies installation (which involves all compilations) take a lot of time when the system is clean (46% of the total), but then is not a problem on re-packings. It’s just not run on a simple re-pack, but for normal situations where the charm code is changed it will be run but super fast as it detects that nothing needs to be updated (see below a comment about this, though).

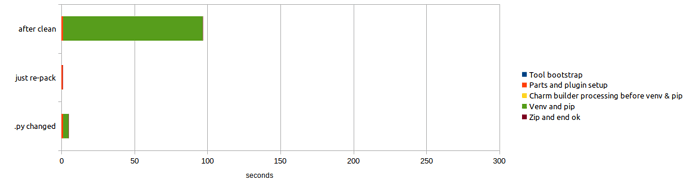

To isolate the measurements from all the container management that is done automatically by Charmcraft, I exercised the same three steps using --destructive-mode:

We can see there that almost all time is taken in the Python dependencies compilation and installation, being the re-packings super fast.

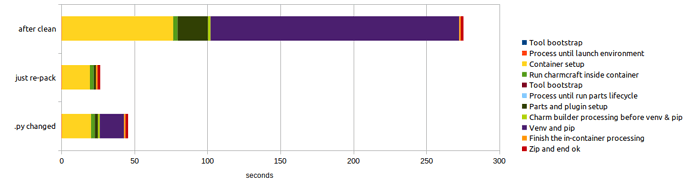

I also measured times for a more complex real-life charm:

One detail to observe in this graph is that the Python dependencies processing (for the case of a simple .py changed) takes noticeable more than in the simple charm case. This is a result of how one dependency is specified in the requirements file (see this speed-up recommendation for more details.

Beyond that, the measurements produced timings very similar to the ones of the simple charm, so the planned improvements would also apply here.

(To be continued…)

Pack profiling, the sequel

After results shown above, we improved different internal processes regarding to the pack command.

The following are the results I took after measuring running times for that command using charmcraft 1.5.0+39.g4957b01.dirty (currently in the edge channel, will be widely available as version 1.6), feel free to check the detailed numbers.

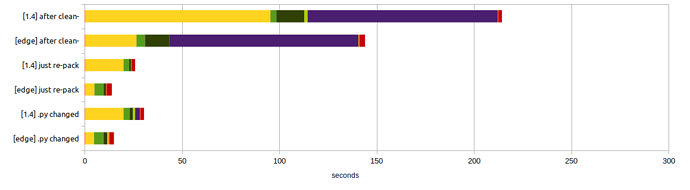

The first runs are for a super simple but complete charm (explained in detail above). The following graph compares the same situations than before using also the new version:

We can see the performance improvements in the container setup, which was the biggest factor in total times after initial run. The virtual environment creation and dependencies compilation and installation still takes a lot of time in the first run, we need to understand if we can do something about that (as it’s actually a process handled by Python’s venv module and pip itself).

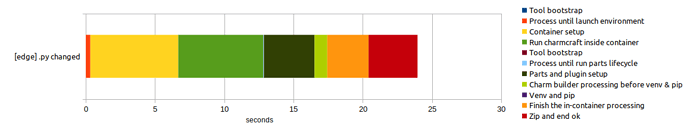

The next graph compares the numbers when packing the grafana-k8s-operator charm before and using the latest Charmcraft version:

In this comparison we can see the aforementioned improvements in the container setup, but also is clearly visible a change we did regarding the dependencies management: when they didn’t change, we just keep previously installed ones and do not even call venv and pip. So previously when only a .py changed, pip was executed just to recognize that all dependencies were up to date and do nothing about them, with the exception of one pointing to GitHub which was the responsible of big part of the processing time; now that step is just skipped and that time saved.

Note that this second profiling shows a slight slowdown in all steps across the packing. As we didn’t change at all the rest of the processing, I can only explain this because different measuring conditions (I run everything in my laptop, each profiling measurements being consistent with each other, but the first profiling happened several weeks ago and laptop conditions surely are not the same than the second one).

In any case, after this first batch of improvements which took the biggest numbers down, we need to sharpen our pencil and start analyzing what happens in all the parts of the packing process, specially for the most common case which affects human time in the charm development:

The plan is to instrument the code, which will allow us to measure the tool behaviour across changes and ensure we always get better, and also give the option for final users to export those timings and give feedback to us for particular environments or packing corner cases.