Welcome to Charmed OSM

This page gives an overview of the first steps to get started with Charmed OSM.

Requirements

- OS: Ubuntu 18.04 LTS (Bionic) or Ubuntu 20.04 LTS (Focal)

- MINIMUM:
  - 4 CPUs
  - 8 GB RAM
  - 50 GB disk
  - Single interface with Internet access.

- RECOMMENDED:
  - 8 CPUs
  - 16 GB RAM
  - 120 GB disk
  - Single interface with Internet access.
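Before running the installer, you can compare the machine against the MINIMUM and RECOMMENDED lists above. The script below is a minimal sketch. It assumes a Linux host with GNU coreutils (nproc, df --output) and /etc/os-release. It also assumes that the disk figure means free space on the root filesystem, because the requirements do not say which filesystem counts. All function names are hypothetical and are not part of install_osm.sh.

```shell
#!/usr/bin/env bash
# Pre-flight check against the Charmed OSM sizing (MINIMUM / RECOMMENDED lists).
# Hypothetical helpers, not part of install_osm.sh.

# meets_sizing CPUS RAM_GB DISK_GB REQ_CPUS REQ_RAM_GB REQ_DISK_GB
# Succeeds (status 0) only if every measured value reaches its requirement.
meets_sizing() {
  [ "$1" -ge "$4" ] && [ "$2" -ge "$5" ] && [ "$3" -ge "$6" ]
}

# os_supported ID VERSION_ID: succeeds for the Ubuntu releases listed as supported.
os_supported() {
  case "$1 $2" in
    "ubuntu 18.04" | "ubuntu 20.04") return 0 ;;
    *) return 1 ;;
  esac
}

# Measurements of the local host (Linux only).
host_cpus() { nproc; }
# MemTotal is in KiB. Round to whole GiB, because the kernel reserves some memory
# and an "8 GB" machine usually reports slightly less than 8 GiB.
host_ram_gb() { awk '/^MemTotal:/ { printf "%.0f\n", $2 / 1048576 }' /proc/meminfo; }
# Free space on the root filesystem in GiB (assumption: / is where microk8s stores data).
host_disk_gb() { df -BG --output=avail / | tail -n 1 | tr -dc '0-9'; }

check_osm_host() {
  local id ver cpus ram disk
  id=$(. /etc/os-release && echo "$ID")
  ver=$(. /etc/os-release && echo "$VERSION_ID")
  cpus=$(host_cpus)
  ram=$(host_ram_gb)
  disk=$(host_disk_gb)
  echo "${id} ${ver}: ${cpus} CPUs, ${ram} GB RAM, ${disk} GB free on /"

  os_supported "$id" "$ver" || echo "Warning: ${id} ${ver} is not a listed OS" >&2

  if meets_sizing "$cpus" "$ram" "$disk" 8 16 120; then
    echo "RECOMMENDED sizing met"
  elif meets_sizing "$cpus" "$ram" "$disk" 4 8 50; then
    echo "MINIMUM sizing met"
  else
    echo "Below MINIMUM sizing" >&2
    return 1
  fi
}
```

For example, save it as preflight.sh (a hypothetical name) and run: source preflight.sh && check_osm_host. The interface requirement is not checked. RAM is rounded, so the result is an approximation, not a guarantee that the installer will succeed.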

User Guide

Installing OSM has never been easier. With a single command, you can deploy Charmed OSM in an empty environment using microk8s.

First, download the upstream installation script and make it executable:

wget https://osm-download.etsi.org/ftp/osm-11.0-eleven/install_osm.sh

chmod +x install_osm.sh

Install

To install Charmed OSM locally, run the following command:

./install_osm.sh --charmed

Checking the status

While the installer is running, it reports progress as the number of active services grows. The installation can take several minutes, depending on the speed of your Internet connection. In the meantime, you can check the status of the deployment with:

watch -c juju status --color

You can also watch the status of the Kubernetes pods:

watch kubectl -n osm get pods

When the deployment has finished, juju status shows output similar to the following (addresses and revisions will differ):

$ juju status
Model  Controller  Cloud/Region        Version  SLA          Timestamp
osm    osm-vca     microk8s/localhost  2.9.17   unsupported  14:32:14+01:00

App          Version                         Status  Scale  Charm        Store       Channel  Rev  OS          Address         Message
grafana      ubuntu/grafana@8952155          active  1      grafana      charmstore  edge     10   kubernetes  10.152.183.111
kafka        wurstmeister/kafka@968e579      active  1      kafka        charmstore  edge     1    kubernetes  10.152.183.203
keystone     opensourcemano/keystone:11      active  1      keystone     charmstore  edge     16   kubernetes  10.152.183.103
lcm          opensourcemano/lcm:11           active  1      lcm          charmstore  edge     15   kubernetes  10.152.183.211
mariadb-k8s  mariadb/server:10.3             active  1      mariadb-k8s  charmstore  stable   35   kubernetes  10.152.183.246
mon          opensourcemano/mon:11           active  1      mon          charmstore  edge     12   kubernetes  10.152.183.249
mongodb      library/mongo@11b4907           active  1      mongodb-k8s  charmhub    stable   1    kubernetes  10.152.183.122
nbi          opensourcemano/nbi:11           active  1      nbi          charmstore  edge     18   kubernetes  10.152.183.217
ng-ui        opensourcemano/ng-ui:11         active  1      ng-ui        charmstore  edge     25   kubernetes  10.152.183.108
pla          opensourcemano/pla:11           active  1      pla          charmstore  edge     13   kubernetes  10.152.183.83
pol          opensourcemano/pol:11           active  1      pol          charmstore  edge     10   kubernetes  10.152.183.181
prometheus   .../prometheus-backup@f3ddf1f   active  1      prometheus   charmstore  edge     9    kubernetes  10.152.183.53
ro           opensourcemano/ro:11            active  1      ro           charmstore  edge     10   kubernetes  10.152.183.84
zookeeper    .../kubernetes-zookeeper@35...  active  1      zookeeper    charmstore  edge     1    kubernetes  10.152.183.235

Unit            Workload  Agent  Address       Ports                       Message
grafana/0*      active    idle   10.1.245.122  3000/TCP                    ready
kafka/0*        active    idle   10.1.245.115  9092/TCP                    ready
keystone/0*     active    idle   10.1.245.119  5000/TCP                    ready
lcm/0*          active    idle   10.1.245.86   9999/TCP                    ready
mariadb-k8s/0*  active    idle   10.1.245.107  3306/TCP                    ready
mon/1*          active    idle   10.1.245.83   8000/TCP                    ready
mongodb/0*      active    idle   10.1.245.124  27017/TCP
nbi/0*          active    idle   10.1.245.105  9999/TCP                    ready
ng-ui/0*        active    idle   10.1.245.98   80/TCP                      ready
pla/0*          active    idle   10.1.245.75   9999/TCP                    ready
pol/0*          active    idle   10.1.245.68   9999/TCP                    ready
prometheus/0*   active    idle   10.1.245.79   9090/TCP                    ready
ro/0*           active    idle   10.1.245.104  9090/TCP                    ready
zookeeper/0*    active    idle   10.1.245.84   2181/TCP,2888/TCP,3888/TCP  ready
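Instead of watching the output by eye, a script can wait for the finished state shown above, where every row of the Unit table reports the Workload "active". The following is a minimal sketch. It assumes the tabular juju status layout shown above, with a Unit/Workload header and a blank line after the table, and the function names are hypothetical. It treats an empty or failed status as "not ready", so a missing Unit table is never mistaken for a completed deployment.

```shell
#!/usr/bin/env bash
# Sketch: wait until every unit in `juju status` reports Workload "active".
# Assumes the tabular layout shown above; helper names are hypothetical.

# count_non_active_units: reads `juju status` output on stdin, prints how many
# units are not "active", and exits non-zero if no Unit table was found.
count_non_active_units() {
  awk '
    $1 == "Unit" && $2 == "Workload" { in_units = 1; next }  # unit table header
    in_units && NF == 0              { in_units = 0; next }  # blank line ends it
    in_units { total++; if ($2 != "active") pending++ }
    END { print pending + 0; exit (total == 0) }
  '
}

# wait_for_osm [TIMEOUT_SECONDS]: polls `juju status` every 15 s until all units
# are active, or gives up after the timeout (default 1800 s).
wait_for_osm() {
  local timeout=${1:-1800} interval=15 elapsed=0 pending
  until pending=$(juju status 2>/dev/null | count_non_active_units) && [ "$pending" -eq 0 ]; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "Timed out after ${timeout}s with ${pending} unit(s) not active" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "All OSM units are active"
}
```

Because juju status disables colour when its output is piped, the awk parser sees plain columns. A JSON-based check (juju status --format=json) would be more robust against layout changes, but it needs an extra JSON tool.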

Known issues

None.

Start playing with OSM

If you installed OSM on a remote machine or in a VM, you can access it through the Kubernetes ingress controller enabled in microk8s. The following services are exposed:

- OSM UI: https://ui.<ip>.nip.io

- NBI: https://nbi.<ip>.nip.io

- Prometheus: https://prometheus.<ip>.nip.io

- Grafana: https://grafana.<ip>.nip.io

Note: replace <ip> with the IP address of the default network interface of your OSM machine.
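The service URLs listed above depend only on that IP address, because nip.io resolves a name such as ui.<ip>.nip.io to <ip>. The ingress then routes each request by hostname. The following is a minimal sketch with hypothetical function names. It assumes the iproute2 ip command is available, and it treats the source address of the route to a public IP as "the IP of the default network interface".

```shell
#!/usr/bin/env bash
# Sketch: print the nip.io URLs of the exposed OSM services for a given IP.
# Helper names are hypothetical.

# default_ip: source address the kernel would use to reach a public IP.
# `ip route get` only consults the routing table; no packet is sent.
default_ip() {
  ip -4 route get 1.1.1.1 2>/dev/null |
    awk '{ for (i = 1; i < NF; i++) if ($i == "src") { print $(i + 1); exit } }'
}

# osm_service_urls IP: prints one URL per exposed service, in the order listed.
osm_service_urls() {
  local ip=${1:?usage: osm_service_urls IP}
  local svc
  for svc in ui nbi prometheus grafana; do
    printf 'https://%s.%s.nip.io\n' "$svc" "$ip"
  done
}
```

For example, osm_service_urls "$(default_ip)" prints the four URLs for the current machine. On hosts with several interfaces, pass the IP explicitly if the detected one is not the address clients will use.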

OSM User Interface

Access the UI from outside the machine at http://ui.<ip>.nip.io, or from inside the OSM machine at http://10.152.183.108 (the ng-ui cluster IP reported by juju status; yours may differ).

- Username: admin

- Password: admin

OSM Client

The OSM client is installed automatically with Charmed OSM. To install it on a separate machine, install the osmclient snap:

sudo snap install osmclient --channel 11.0/stable

The OSM client needs the OSM_HOSTNAME environment variable to point to the NBI:

export OSM_HOSTNAME=nbi.<ip>.nip.io:443

osm --help # print all the commands

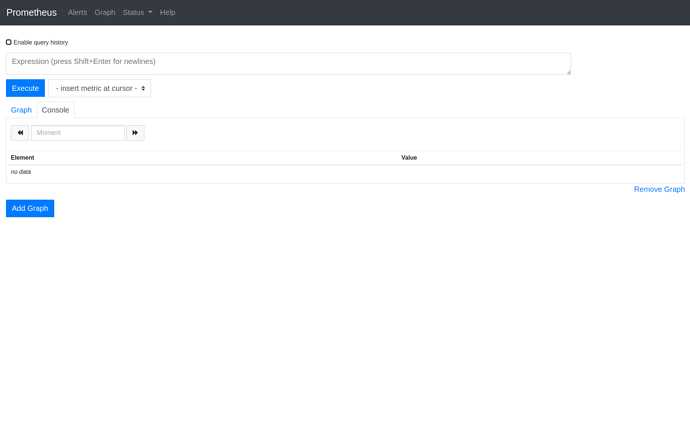
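When you switch between OSM machines, you can derive the OSM_HOSTNAME value from the machine's IP instead of typing it by hand. The following is a minimal sketch that reuses the nbi.<ip>.nip.io:443 format from the export line above. The function names are hypothetical, and the functions must be sourced so that the export reaches your current shell.

```shell
#!/usr/bin/env bash
# Sketch: point the OSM client at the NBI ingress of a given OSM machine.
# Helper names are hypothetical; the hostname format follows the export above.

# osm_nbi_hostname IP: prints the value OSM_HOSTNAME should take.
osm_nbi_hostname() {
  printf 'nbi.%s.nip.io:443\n' "${1:?usage: osm_nbi_hostname IP}"
}

# use_osm_host IP: exports OSM_HOSTNAME in the current shell and warns if the
# osm command is not on the PATH.
use_osm_host() {
  OSM_HOSTNAME=$(osm_nbi_hostname "$1") || return 1
  export OSM_HOSTNAME
  command -v osm >/dev/null || echo "osm not found; install the osmclient snap first" >&2
  echo "OSM_HOSTNAME=${OSM_HOSTNAME}"
}
```

For example: use_osm_host 192.168.1.10 && osm ns-list. Here 192.168.1.10 stands in for the IP of your OSM machine.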

Prometheus

Access the Prometheus UI from outside the machine at http://prometheus.<ip>.nip.io, or from inside the OSM machine at http://10.152.183.53:9090 (the prometheus cluster IP reported by juju status).

- Username: admin

- Password: admin

Grafana

Access the Grafana UI from outside the machine at http://grafana.<ip>.nip.io, or from inside the OSM machine at http://10.152.183.111:3000 (the grafana cluster IP reported by juju status).

- Username: admin

- Password: run the following command to retrieve it:

juju run --unit grafana/0 -- "echo \$GF_SECURITY_ADMIN_PASSWORD"
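If you need the Grafana password in a script, for example to log in non-interactively, you can capture it in a variable instead of copying it from the terminal. The following is a minimal sketch that wraps the juju run command above, which uses Juju 2.9 syntax as in the sample status. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: capture the Grafana admin password printed by the grafana unit.
# Wraps the `juju run` command shown above; the function name is hypothetical.

# grafana_admin_password: prints the password with any trailing carriage return
# or newline from the remote command output removed.
grafana_admin_password() {
  # Single quotes stop the local shell from expanding the variable, so it is
  # expanded inside the grafana unit instead (same effect as the \$ escape).
  juju run --unit grafana/0 -- 'echo $GF_SECURITY_ADMIN_PASSWORD' | tr -d '\r\n'
}
```

For example: GRAFANA_PASSWORD=$(grafana_admin_password). Keep the variable out of shell history and logs, because it holds the admin credential.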

Uninstall

To uninstall Charmed OSM, run the following command:

juju kill-controller osm-vca -y -t0

Note: this does not remove microk8s or juju, even if the installer installed them. Once you have confirmed that no other services depend on them, you can remove them manually with the following commands:

sudo snap remove --purge juju

sudo snap remove --purge microk8s

Troubleshooting

If you run into any trouble with the installation, please contact us; we will be glad to answer your questions:

- Guillermo Calviño guillermo.calvino@canonical.com

- David Garcia david.garcia@canonical.com

- Eduardo Sousa eduardo.sousa@canonical.com

- Mark Beierl mark.beierl@canonical.com