Waiting for CI is painful

Does this sound familiar?

- You push a commit with minor changes

- CI is slow, so you start working on something else

- A couple of hours later, you remember to check CI. It failed, and you don’t remember why you made the changes

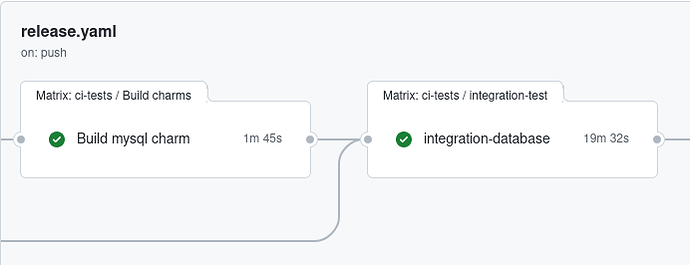

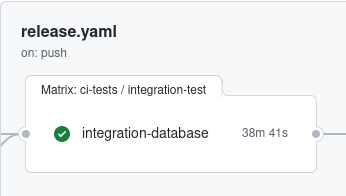

For the mysql charm, our integration tests took around an hour or longer. By using caching in GitHub Actions, we reduced this by around a third.

Before caching

After caching

Caching charmcraft pack

By default, charmcraft pack uses an LXC container to build your charm. On your local machine, this container is reused: after you pack your charm once, later runs of charmcraft pack are much quicker. On GitHub CI, each runner starts fresh, so charmcraft pack creates a new container every time.

If we cache the LXC container on GitHub CI, charmcraft pack can reuse the container.


Update: The steps below are now outdated. We’ve replaced GitHub caching with charmcraftcache.

(outdated) Step 1: Cache the LXC container

Using GitHub actions/cache, we save a new LXC container when charmcraft.yaml, actions.yaml, or requirements.txt is modified.
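To make that invalidation rule concrete, here is a minimal Python sketch of deriving a cache key from those three files, in the spirit of keying actions/cache on hashFiles(...). The helper name lxc_cache_key and the charmcraft-lxc prefix are hypothetical; the reusable workflow may build its key differently.

```python
import hashlib
from pathlib import Path

# Files named above: changing any of them should produce a new cached container.
CACHE_INPUTS = ("charmcraft.yaml", "actions.yaml", "requirements.txt")


def lxc_cache_key(charm_dir: Path, prefix: str = "charmcraft-lxc") -> str:
    """Return a cache key that changes whenever any of CACHE_INPUTS changes.

    Hypothetical helper: it illustrates keying a cache on file contents,
    not the exact key format used by build_charms_with_cache.yaml.
    """
    digest = hashlib.sha256()
    for name in CACHE_INPUTS:
        path = charm_dir / name
        digest.update(name.encode())
        if path.exists():
            digest.update(b"present:" + path.read_bytes())
        else:
            # A missing file contributes a different marker than an existing one.
            digest.update(b"missing")
    return f"{prefix}-{digest.hexdigest()}"
```

If the key matches an existing cache entry, the saved container is restored and charmcraft pack reuses it. If any of the files changed, the key changes, so a new container is built and saved under the new key.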

GitHub has a 10 GiB cache limit per repository (if exceeded, GitHub deletes the oldest cache). Each LXC container is around 0.5–1.0 GB. For the mysql charm, our integration tests use 4 charms (including different Ubuntu versions).
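A rough capacity check with those figures shows why the limit matters. This sketch assumes every cached set holds all 4 containers at the same size; real container sizes vary between charms and bases.

```python
# Rough capacity check using the figures quoted above.
CACHE_LIMIT_BYTES = 10 * 2**30   # 10 GiB per repository
CHARMS_PER_CACHE_SET = 4         # charms built for the mysql integration tests
CONTAINER_SIZES_GB = (0.5, 1.0)  # observed LXC container size range


def cache_sets_that_fit(container_gb: float) -> int:
    """Return how many full sets of cached containers fit under the limit."""
    set_bytes = CHARMS_PER_CACHE_SET * container_gb * 1e9
    return int(CACHE_LIMIT_BYTES // set_bytes)


for size in CONTAINER_SIZES_GB:
    print(f"{size} GB containers: {cache_sets_that_fit(size)} full cache sets")
```

Under these assumptions only two to five full sets fit, so a handful of pull requests that each modify charmcraft.yaml or requirements.txt is enough to start evicting older caches.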

When a pull request is opened, a cache can only be restored from the default branch (main). If we exceed the 10 GiB limit and the cache on main is deleted, new pull requests cannot restore a cache. To avoid this, we delete and re-save the cache on main once per day, so that it is always the newest cache and is never the first to be evicted.
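The sketch below is a simplified model of the eviction behaviour described above (oldest cache deleted first), not GitHub's exact policy. It assumes one ~4 GB set of containers per branch, a limit rounded to 10 GB, and one new pull request cache per day, and compares what happens to main's cache with and without the daily refresh.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CacheEntry:
    ref: str          # branch the cache was saved from, e.g. "main" or "pr-1"
    saved_order: int  # increases with every save; lower means older
    size_gb: float


def evict_oldest(entries: list[CacheEntry], limit_gb: float) -> list[CacheEntry]:
    """Delete the oldest entries until the total size fits under limit_gb."""
    kept = sorted(entries, key=lambda e: e.saved_order)
    while kept and sum(e.size_gb for e in kept) > limit_gb:
        kept.pop(0)
    return kept


def main_cache_survives(refresh_main_daily: bool, days: int = 5) -> bool:
    """Return True if main still has a cache after `days` of pull request activity."""
    order = 0
    entries = [CacheEntry("main", order, 4.0)]
    for day in range(1, days + 1):
        if refresh_main_daily:
            # Delete main's cache, then save it again so it becomes the newest entry.
            entries = [e for e in entries if e.ref != "main"]
            order += 1
            entries.append(CacheEntry("main", order, 4.0))
        # Each day, a pull request saves its own ~4 GB set of containers.
        order += 1
        entries.append(CacheEntry(f"pr-{day}", order, 4.0))
        entries = evict_oldest(entries, limit_gb=10.0)
    return any(e.ref == "main" for e in entries)


print(main_cache_survives(refresh_main_daily=False))  # False
print(main_cache_survives(refresh_main_daily=True))   # True
```

Without the refresh, main's cache is the oldest entry and is the first to go once pull request caches fill the limit. With the refresh, the oldest pull request cache is evicted instead.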

All of this is handled by the reusable GitHub workflow we created: build_charms_with_cache.yaml.

You can use it in your own GitHub workflows with this:

```yaml
jobs:
  build:
    name: Build charms
    uses: canonical/data-platform-workflows/.github/workflows/build_charms_with_cache.yaml@v2
    permissions:
      actions: write  # Needed to manage GitHub Actions cache
```

The build_charms_with_cache.yaml workflow finds all charms in the GitHub repository, runs charmcraft pack for every base (Ubuntu version) of each charm, and uploads the *.charm files as a GitHub artifact.
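As a rough illustration of the discovery step, this sketch finds charm directories by looking for charmcraft.yaml files. The function name find_charm_directories and the rule for skipping hidden directories are assumptions; the reusable workflow may discover charms differently.

```python
from __future__ import annotations

from pathlib import Path


def find_charm_directories(repo_root: Path) -> list[Path]:
    """Return charm directories (relative to repo_root) that contain charmcraft.yaml.

    Hypothetical helper: the real build_charms_with_cache.yaml workflow may use
    different discovery rules.
    """
    found = []
    for charmcraft_yaml in repo_root.rglob("charmcraft.yaml"):
        relative_dir = charmcraft_yaml.parent.relative_to(repo_root)
        # Skip hidden directories such as .git or .tox.
        if any(part.startswith(".") for part in relative_dir.parts):
            continue
        found.append(relative_dir)
    return sorted(found)
```

Each directory returned here (for example ".", plus any test charms under tests/) would then be packed with charmcraft pack, once per base.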

(outdated) Step 2: Download the *.charm file in the integration tests

Add these steps to your integration test job:

```yaml
jobs:
  build:
    …
  integration-test:
    name: Integration tests
    needs:
      - build
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v3
      - name: Download packed charm(s)
        uses: actions/download-artifact@v3
        with:
          name: ${{ needs.build.outputs.artifact-name }}
      - name: Run integration test
        run: tox run -e integration
        env:
          CI_PACKED_CHARMS: ${{ needs.build.outputs.charms }}
```
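The CI_PACKED_CHARMS value comes from the build job's charms output. As a hypothetical sketch of how such an output could be produced, the snippet below serializes packed-charm metadata as single-line JSON and appends it to the file named by GITHUB_OUTPUT, which is how GitHub Actions steps set outputs. The keys (directory_path, bases_index, file_path) match what the conftest.py fixture in this post reads; the function name and charm file names are illustrative only.

```python
from __future__ import annotations

import json
import os
from typing import Optional


def write_packed_charms_output(packed_charms: list[dict], output_file: Optional[str] = None) -> str:
    """Append a `charms=<json>` line to the GitHub Actions output file.

    Hypothetical helper: the real reusable workflow may produce its output differently.
    """
    # json.dumps without indent produces a single line, as name=value outputs require.
    value = json.dumps(packed_charms, separators=(",", ":"))
    path = output_file or os.environ["GITHUB_OUTPUT"]
    with open(path, "a", encoding="utf-8") as f:
        f.write(f"charms={value}\n")
    return value
```

The JSON has to stay on one line because each name=value line in GITHUB_OUTPUT defines one output.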

Before we added caching to the mysql charm CI, our integration tests used ops_test.build_charm() to pack the charm.

To avoid changing the integration tests, we can override the ops_test pytest fixture (from pytest-operator) in tests/integration/conftest.py:

```python
# Copyright 2022 Canonical Ltd.
import json
import os
from pathlib import Path

import pytest
from pytest_operator.plugin import OpsTest


@pytest.fixture(scope="module")
def ops_test(ops_test: OpsTest) -> OpsTest:
    if os.environ.get("CI") == "true":
        # Running in GitHub CI; skip build step
        # (GitHub CI uses a separate, cached build step)
        packed_charms = json.loads(os.environ["CI_PACKED_CHARMS"])

        async def build_charm(charm_path, bases_index: int = None) -> Path:
            for charm in packed_charms:
                if Path(charm_path) == Path(charm["directory_path"]):
                    if bases_index is None or bases_index == charm["bases_index"]:
                        return charm["file_path"]
            raise ValueError(f"Unable to find .charm file for {bases_index=} at {charm_path=}")

        ops_test.build_charm = build_charm
    return ops_test
```

If a test runs in GitHub CI, any calls to ops_test.build_charm() will return the path to the downloaded *.charm file instead of packing the charm.
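Because the lookup lives inside an async closure, it is awkward to test on its own. One option (a suggestion, not part of the setup described above) is to pull the matching logic into a plain function; find_packed_charm is a hypothetical name, and the charm file names in the example data are made up.

```python
from __future__ import annotations

from pathlib import Path
from typing import Optional


def find_packed_charm(packed_charms: list[dict], charm_path, bases_index: Optional[int] = None) -> str:
    """Return the file_path of the packed charm matching charm_path (and bases_index)."""
    for charm in packed_charms:
        if Path(charm_path) == Path(charm["directory_path"]):
            # With no bases_index, the first charm built from this directory wins.
            if bases_index is None or bases_index == charm["bases_index"]:
                return charm["file_path"]
    raise ValueError(f"Unable to find .charm file for {bases_index=} at {charm_path=}")


packed = [
    {"directory_path": ".", "bases_index": 0, "file_path": "./mysql_ubuntu-20.04-amd64.charm"},
    {"directory_path": ".", "bases_index": 1, "file_path": "./mysql_ubuntu-22.04-amd64.charm"},
]
print(find_packed_charm(packed, "./", bases_index=1))  # ./mysql_ubuntu-22.04-amd64.charm
```

The overridden build_charm() could then simply return find_packed_charm(packed_charms, charm_path, bases_index), and the matching rules can be unit-tested without Juju.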

Finally, if you’re using tox, pass in the CI and CI_PACKED_CHARMS environment variables. Example tox.ini:

```ini
[testenv:integration]
pass_env =
    {[testenv]pass_env}
    CI
    CI_PACKED_CHARMS
```

For a complete example, look at this pull request: mysql-k8s-operator#146.

Further improvements

See this post: Faster CI results by running integration tests in parallel