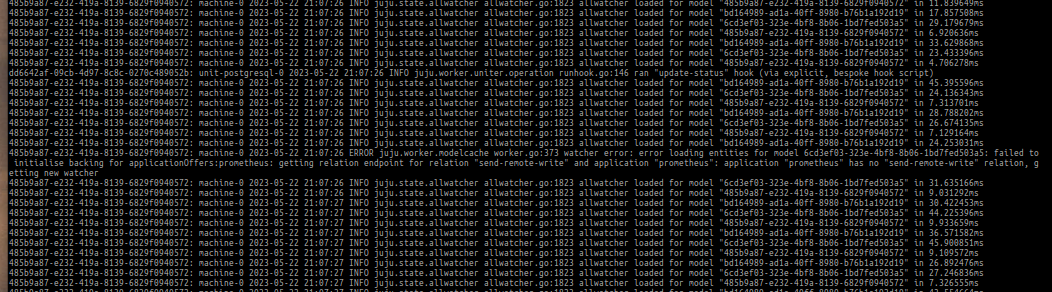

My controller is completely dead and I need to tear it down. These are the last log lines from the controller restart; it neither recovers nor starts.

`tail -f /var/log/juju/machine-0.log`

2023-05-25 17:52:01 INFO juju.state.allwatcher allwatcher.go:1823 allwatcher loaded for model "f720b50a-0ff1-4264-855b-3969806d415e" in 7.533894ms

2023-05-25 17:52:01 ERROR juju.worker.modelcache worker.go:373 watcher error: error loading entities for model 6cd3ef03-323e-4bf8-8b06-1bd7fed503a5: failed to initialise backing for applicationOffers:prometheus: getting relation endpoint for relation "send-remote-write" and application "prometheus": application "prometheus" has no "send-remote-write" relation, getting new watcher

2023-05-25 17:52:01 INFO juju.state.allwatcher allwatcher.go:1823 allwatcher loaded for model "6cd3ef03-323e-4bf8-8b06-1bd7fed503a5" in 39.683876ms

2023-05-25 17:52:01 INFO juju.state.allwatcher allwatcher.go:1823 allwatcher loaded for model "485b9a87-e232-419a-8139-6829f0940572" in 13.184189ms

2023-05-25 17:52:01 INFO juju.state.allwatcher allwatcher.go:1823 allwatcher loaded for model "f720b50a-0ff1-4264-855b-3969806d415e" in 8.064403ms

`cat /var/snap/juju-db/common/logs/mongodb.log.2023-05-25T17-49-16`

{"t":{"$date":"2023-05-25T17:49:15.455+00:00"},"s":"I", "c":"CONTROL", "id":23285, "ctx":"main","msg":"Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'"}

{"t":{"$date":"2023-05-25T17:49:15.456+00:00"},"s":"I", "c":"NETWORK", "id":4648601, "ctx":"main","msg":"Implicit TCP FastOpen unavailable. If TCP FastOpen is required, set tcpFastOpenServer, tcpFastOpenClient, and tcpFastOpenQueueSize."}

{"t":{"$date":"2023-05-25T17:49:15.528+00:00"},"s":"I", "c":"STORAGE", "id":4615611, "ctx":"initandlisten","msg":"MongoDB starting","attr":{"pid":25175,"port":37017,"dbPath":"/var/snap/juju-db/common/db","architecture":"64-bit","host":"juju-940572-0"}}

{"t":{"$date":"2023-05-25T17:49:15.528+00:00"},"s":"I", "c":"CONTROL", "id":23403, "ctx":"initandlisten","msg":"Build Info","attr":{"buildInfo":{"version":"4.4.18","gitVersion":"8ed32b5c2c68ebe7f8ae2ebe8d23f36037a17dea","openSSLVersion":"OpenSSL 1.1.1f 31 Mar 2020","modules":[],"allocator":"tcmalloc","environment":{"distarch":"x86_64","target_arch":"x86_64"}}}}

{"t":{"$date":"2023-05-25T17:49:15.528+00:00"},"s":"I", "c":"CONTROL", "id":51765, "ctx":"initandlisten","msg":"Operating System","attr":{"os":{"name":"Ubuntu","version":"20.04"}}}

{"t":{"$date":"2023-05-25T17:49:15.528+00:00"},"s":"I", "c":"CONTROL", "id":21951, "ctx":"initandlisten","msg":"Options set by command line","attr":{"options":{"config":"/var/snap/juju-db/common/juju-db.config","net":{"bindIp":"*","ipv6":true,"port":37017,"tls":{"certificateKeyFile":"/var/snap/juju-db/common/server.pem","certificateKeyFilePassword":"<password>","mode":"requireTLS"}},"operationProfiling":{"slowOpThresholdMs":1000},"replication":{"oplogSizeMB":1024,"replSet":"juju"},"security":{"authorization":"enabled","keyFile":"/var/snap/juju-db/common/shared-secret"},"storage":{"dbPath":"/var/snap/juju-db/common/db","engine":"wiredTiger","journal":{"enabled":true}},"systemLog":{"destination":"file","path":"/var/snap/juju-db/common/logs/mongodb.log","quiet":true}}}}

{"t":{"$date":"2023-05-25T17:49:15.529+00:00"},"s":"I", "c":"STORAGE", "id":22297, "ctx":"initandlisten","msg":"Using the XFS filesystem is strongly recommended with the WiredTiger storage engine. See http://dochub.mongodb.org/core/prodnotes-filesystem","tags":["startupWarnings"]}

{"t":{"$date":"2023-05-25T17:49:15.530+00:00"},"s":"I", "c":"STORAGE", "id":22315, "ctx":"initandlisten","msg":"Opening WiredTiger","attr":{"config":"create,cache_size=15495M,session_max=33000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),file_manager=(close_idle_time=100000,close_scan_interval=10,close_handle_minimum=250),statistics_log=(wait=0),verbose=[recovery_progress,checkpoint_progress,compact_progress],"}}

{"t":{"$date":"2023-05-25T17:49:15.866+00:00"},"s":"I", "c":"CONTROL", "id":23377, "ctx":"SignalHandler","msg":"Received signal","attr":{"signal":15,"error":"Terminated"}}

{"t":{"$date":"2023-05-25T17:49:15.866+00:00"},"s":"I", "c":"CONTROL", "id":23378, "ctx":"SignalHandler","msg":"Signal was sent by kill(2)","attr":{"pid":1,"uid":0}}

{"t":{"$date":"2023-05-25T17:49:15.866+00:00"},"s":"I", "c":"CONTROL", "id":23381, "ctx":"SignalHandler","msg":"will terminate after current cmd ends"}

{"t":{"$date":"2023-05-25T17:49:15.866+00:00"},"s":"I", "c":"REPL", "id":4784900, "ctx":"SignalHandler","msg":"Stepping down the ReplicationCoordinator for shutdown","attr":{"waitTimeMillis":10000}}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"COMMAND", "id":4784901, "ctx":"SignalHandler","msg":"Shutting down the MirrorMaestro"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"SHARDING", "id":4784902, "ctx":"SignalHandler","msg":"Shutting down the WaitForMajorityService"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"NETWORK", "id":20562, "ctx":"SignalHandler","msg":"Shutdown: going to close listening sockets"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"NETWORK", "id":4784905, "ctx":"SignalHandler","msg":"Shutting down the global connection pool"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"STORAGE", "id":4784906, "ctx":"SignalHandler","msg":"Shutting down the FlowControlTicketholder"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"-", "id":20520, "ctx":"SignalHandler","msg":"Stopping further Flow Control ticket acquisitions."}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"REPL", "id":4784907, "ctx":"SignalHandler","msg":"Shutting down the replica set node executor"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"NETWORK", "id":4784918, "ctx":"SignalHandler","msg":"Shutting down the ReplicaSetMonitor"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"SHARDING", "id":4784921, "ctx":"SignalHandler","msg":"Shutting down the MigrationUtilExecutor"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"CONTROL", "id":4784925, "ctx":"SignalHandler","msg":"Shutting down free monitoring"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"STORAGE", "id":4784927, "ctx":"SignalHandler","msg":"Shutting down the HealthLog"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"STORAGE", "id":4784929, "ctx":"SignalHandler","msg":"Acquiring the global lock for shutdown"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"-", "id":4784931, "ctx":"SignalHandler","msg":"Dropping the scope cache for shutdown"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"FTDC", "id":4784926, "ctx":"SignalHandler","msg":"Shutting down full-time data capture"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"CONTROL", "id":20565, "ctx":"SignalHandler","msg":"Now exiting"}

{"t":{"$date":"2023-05-25T17:49:15.867+00:00"},"s":"I", "c":"CONTROL", "id":23138, "ctx":"SignalHandler","msg":"Shutting down","attr":{"exitCode":0}}
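To pick the shutdown trigger out of a MongoDB log like the one above, a small filter can help. This is only a minimal sketch, assuming the log is in the one-JSON-object-per-line format shown here; `find_signal_events` is a hypothetical helper name, not part of Juju or MongoDB.

```python
import json


def find_signal_events(lines):
    """Return (timestamp, message, attr) tuples for signal-related log entries.

    Assumes each non-empty line is one JSON object with the keys seen in the
    log above ("t", "ctx", "msg", and optionally "attr").
    """
    events = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip anything that is not a JSON log entry
        if not isinstance(entry, dict):
            continue
        # Keep only SignalHandler entries whose message mentions a signal.
        if entry.get("ctx") == "SignalHandler" and "signal" in entry.get("msg", "").lower():
            timestamp = entry.get("t", {}).get("$date")
            events.append((timestamp, entry["msg"], entry.get("attr", {})))
    return events
```

Calling it on an open file handle for the log should return the "Received signal" and "Signal was sent by kill(2)" entries together with their `attr` fields (signal number, sending pid and uid).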