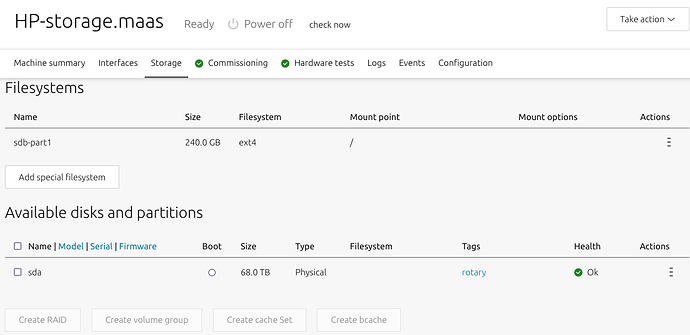

Thanks. So what you want to do is deploy a ceph-osd unit onto the target machine. However, you said that the output (screenshot) is for “another server”, and we need to know precisely which server we are deploying onto. Let’s assume it is the MAAS node named HP-storage.maas.

In the manual install steps I would install the first ceph-osd unit in this way:

juju deploy --config ceph-osd.yaml --to HP-storage.maas ceph-osd

Where the file ceph-osd.yaml contains the configuration:

    ceph-osd:
      osd-devices: /dev/sda
      source: cloud:focal-wallaby

Alternatively, you can create a tag in the MAAS web UI (for example ceph-osd-hp), assign it to the server, and then deploy using a tag constraint:

juju deploy --config ceph-osd.yaml --constraints tags=ceph-osd-hp ceph-osd

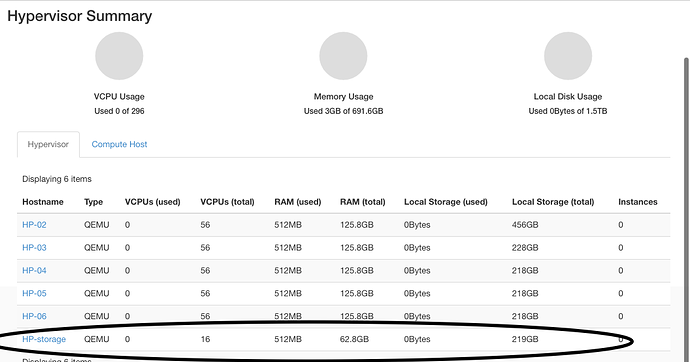

Normally, however, we want more than one OSD storage node.

If you had three identical HP servers tagged in this way, you could deploy all three units with one invocation:

juju deploy -n 3 --config ceph-osd.yaml --constraints tags=ceph-osd-hp ceph-osd

To scale out manually using other available servers:

juju add-unit ceph-osd

If two are available:

juju add-unit -n 2 ceph-osd

If these other servers have different block devices (e.g. /dev/sdb) then just alter the ceph-osd application’s configuration by changing this line in the ceph-osd.yaml file:

osd-devices: /dev/sda /dev/sdb

Note that the ceph-osd charm will not overwrite a disk currently in use.

Then apply the configuration change (the new units will be notified automatically):

juju config --file ceph-osd.yaml ceph-osd

When it comes time to deploy ceph-mon, make sure you set the expected-osd-count option appropriately. The count is the total number of block devices used as OSD disks across the whole Ceph cluster (a single server can host more than one OSD).
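As a rough sketch of what that could look like, assume a hypothetical cluster of three OSD nodes that each contribute exactly one disk. The file name ceph-mon.yaml and the value 3 are assumptions for illustration only; work out the real count from your own hardware. The source value simply mirrors the one used in ceph-osd.yaml.

```yaml
ceph-mon:
  # Illustrative assumption: 3 OSD nodes x 1 OSD disk each = 3 OSDs in total.
  # If a node contributes two disks, it counts as two here.
  expected-osd-count: 3
  # Same package source as in ceph-osd.yaml.
  source: cloud:focal-wallaby
```

Such a file would be passed at deploy time in the same way as for ceph-osd, using --config ceph-mon.yaml. The number of ceph-mon units and where they are placed depend on your environment.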