When the author of jhack meets the author of Scenario, interesting things happen!

Jhack offers a tool called snapshot. You should be able to reach the entry point by typing `jhack scenario snapshot` in a shell.

Snapshot used to be shipped with Scenario but was removed in v5.6.1. We moved it over to jhack, where it has been available since v0.3.23.

Snapshot’s purpose is to gather the State data structure from a real, live charm running in some cloud your local juju client has access to. This is handy when:

- you want to write a test about the state the charm you’re developing is currently in

- your charm is broken or in some inconsistent state, and you want to write a test to check that the charm will handle it correctly the next time around (aka regression testing)

- you are new to Scenario and want to quickly get started with a real-life example.

Example: gathering state from prometheus-k8s

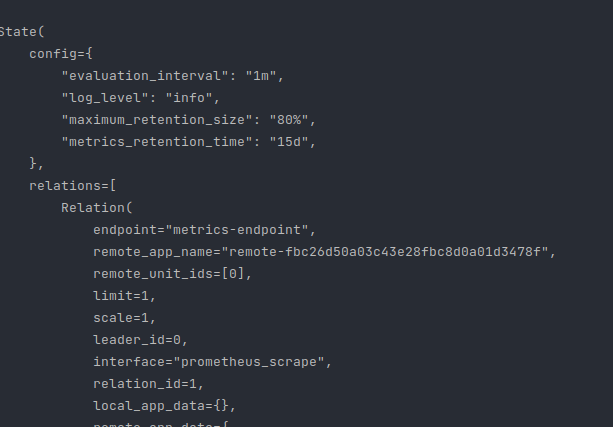

Suppose you have a Juju model with a prometheus-k8s unit deployed as prometheus-k8s/0. If you type `jhack scenario snapshot prometheus-k8s/0`, you will get a printout of the State object.

Copy-paste that into a file, import all you need from scenario, and you have a working State that you can `.trigger()` events from.

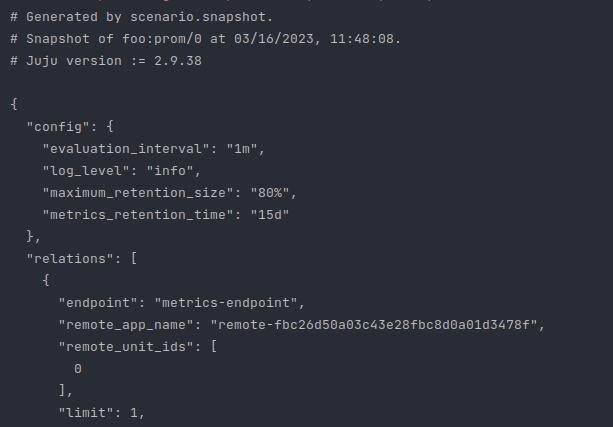

You can also pass `--format json` or `--format pytest` to obtain, respectively:

- a jsonified `State` data structure (a plain `dataclasses.asdict` preprocessing step in fact), for portability

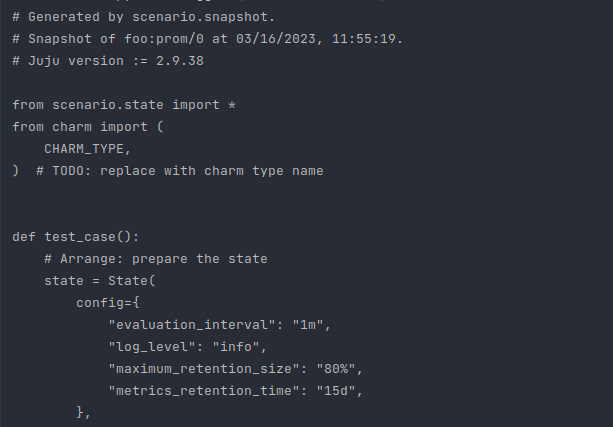

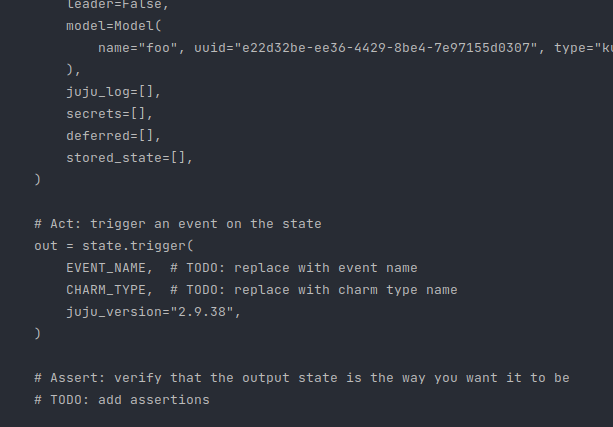
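To make the `dataclasses.asdict` step concrete, here is a minimal sketch of how a nested dataclass can be flattened into plain containers and then dumped as JSON. `ToyState` and `ToyRelation` are hypothetical stand-ins invented for this illustration; Scenario's real `State` has more fields and different types, and snapshot's actual serialisation code may differ.

```python
import dataclasses
import json


# Hypothetical stand-ins for illustration only; not Scenario's real classes.
@dataclasses.dataclass(frozen=True)
class ToyRelation:
    endpoint: str
    remote_app_data: dict = dataclasses.field(default_factory=dict)


@dataclasses.dataclass(frozen=True)
class ToyState:
    leader: bool = False
    config: dict = dataclasses.field(default_factory=dict)
    relations: list = dataclasses.field(default_factory=list)


state = ToyState(
    leader=True,
    config={"log_level": "info"},
    relations=[ToyRelation("ingress", {"host": "example.local"})],
)

# asdict recurses into nested dataclasses, lists and dicts,
# leaving only plain Python containers that json can serialise.
as_plain = dataclasses.asdict(state)
as_json = json.dumps(as_plain, indent=2)
print(as_json)
```

Because the result is plain JSON, it can be stored or shared without importing scenario on the receiving side.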

- a full-fledged pytest test case (with imports and all), where you only have to fill in the charm type and the event that you wish to trigger.

[…]

Pipe that out to a file, and you have your unit test!
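If you would rather script the "pipe to a file" step than do it by hand, something along these lines might work. It is only a sketch: the helper names `build_snapshot_command` and `snapshot_to_file` are made up for this example, and it assumes jhack is on your PATH, that your juju client can reach the model, and that snapshot writes the generated test to stdout.

```python
import subprocess
from pathlib import Path


def build_snapshot_command(unit: str, fmt: str = "pytest") -> list:
    """Assemble the CLI invocation described in this post (hypothetical helper)."""
    return ["jhack", "scenario", "snapshot", unit, "--format", fmt]


def snapshot_to_file(unit: str, target: Path, fmt: str = "pytest") -> Path:
    """Run snapshot and write whatever it prints on stdout to `target`.

    Assumes jhack is installed and a juju model containing `unit` is reachable.
    """
    result = subprocess.run(
        build_snapshot_command(unit, fmt),
        check=True,           # raise if jhack exits with an error
        capture_output=True,  # collect stdout instead of printing it
        text=True,
    )
    target.write_text(result.stdout)
    return target
```

For example, `snapshot_to_file("prometheus-k8s/0", Path("test_prometheus.py"))` would leave you with a test file in which you still need to fill in the charm type and the event.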

Snapshot is fairly complete: only secrets still need to be implemented. Out of the box, you can expect:

- config

- networks

- relations (and relation data)

- containers for k8s charms

- deferred events

- stored state

- status

- model metadata

- leadership

With some setup, you can also fetch files from Kubernetes workload containers (think: application config files, etc.) and see them assigned automatically to the container mounts they’re at!