Hi charmers! As often happens, a little project of mine grew horribly out of proportion: what started as a hacky way to simulate a ‘real’ charm execution locally (with ‘real’ data captured on a live unit: see jhack replay) became a new framework for writing charm unit tests.

The project is called ops-scenario. In the future, if ops becomes a pure namespace package, we could expose it as an ops.scenario import. For now, you can pip install ops-scenario and import from scenario.

https://pypi.org/project/ops-scenario/

Core idea

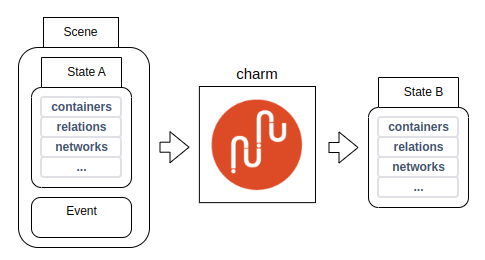

The built-in, ops-provided Harness has an imperative API: the test writer incrementally drives an initially blank state towards a final state by adding relations, injecting mock data, and so on, and each state mutation triggers a separate event on the charm. The core vision of scenario tests, by contrast, is to look at each event as an atomic state transition. The charm is then viewed as a black box, a simple function charm(state_in) -> state_out.

This encourages writing tests that are more tightly encapsulated, and discourages tests that imply, suggest, or, even worse, depend on the ordering of the events being tested. Each event has to be taken in full isolation.
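To make the black-box view concrete, here is a minimal, library-free sketch. The `State` dataclass, its fields, and the `charm` function are hypothetical stand-ins for illustration, not the ops-scenario API; the only point is that one event plus one input state yields one output state, with no memory of earlier events.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class State:
    """Toy stand-in for the charm's full state (hypothetical fields)."""

    leader: bool = False
    unit_status: str = "unknown"


def charm(event: str, state_in: State) -> State:
    """Toy charm: maps (event, state_in) to state_out without mutating state_in."""
    if event == "update-status":
        status = "active" if state_in.leader else "waiting"
        return replace(state_in, unit_status=status)
    # Events this toy charm does not handle leave the state unchanged.
    return state_in


# Each call is self-contained: the outcome depends only on the event and state_in.
leader_out = charm("update-status", State(leader=True))
follower_out = charm("update-status", State(leader=False))
```

Because the input state is frozen and the function keeps nothing between calls, the two calls above cannot influence each other, whichever order they run in.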

Core ideas:

- Each event triggers a charm execution. There is no charm execution without an event.

- Each execution brings with it a fully-specified initial state (a closed-world assumption regarding the Juju state, if you like).

- The main place to run assertions on is the output state; in particular, the diff with the input state.

- A secondary place to put assertions is an in-context hook which allows you to call charm methods and interact with the world from the POV of the charm before the simulation is torn down.
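The idea of asserting on the diff between input and output state can be sketched without any charm library. Everything below is an assumption made for illustration: the `State` fields are invented, and `delta` is a hypothetical helper, not the function ops-scenario ships.

```python
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class State:
    """Toy state with a few invented fields."""

    leader: bool = False
    unit_status: str = "unknown"
    config: dict = field(default_factory=dict)


def delta(state_in: State, state_out: State) -> dict:
    """Return {field: (before, after)} for every top-level field that changed."""
    before, after = asdict(state_in), asdict(state_out)
    return {key: (before[key], after[key]) for key in before if before[key] != after[key]}


state_in = State(leader=True, config={"port": 8080})
# Pretend this is what the charm produced for some event.
state_out = State(leader=True, unit_status="active", config={"port": 8080})

changes = delta(state_in, state_out)
```

Asserting on `changes` rather than on individual fields also catches unexpected side effects: any field the test did not expect to change shows up in the diff.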

Core goals:

- provide stricter isolation of the execution environment: each charm execution gets a fresh state, environment, charm instance, and so on.

- provide a tighter, more true-to-nature simulation layer than Harness does, without sacrificing speed: make it possible to replace more integration tests with unit tests (although it will obviously never fully replace them).

- make it easier to reason about a charm’s runtime and what it means to test it.

- provide a monolithic data structure representing ‘the state’ of the charm in the context of a given event/execution, to aid debugging and make charm executions reproducible.
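As a rough illustration of the last goal, a single state object that can be serialized makes an execution easy to store and rerun. The sketch below uses only the standard library; `State`, `dump_state`, `load_state`, and the toy `charm` function are all hypothetical names, not part of ops-scenario.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class State:
    """Toy state with invented fields."""

    leader: bool = False
    unit_status: str = "unknown"


def dump_state(state: State) -> str:
    """Serialize the whole state to a JSON string."""
    return json.dumps(asdict(state), sort_keys=True)


def load_state(raw: str) -> State:
    """Rebuild a State from its JSON form."""
    return State(**json.loads(raw))


def charm(event: str, state_in: State) -> State:
    """Toy charm: a leader goes active on 'start'; anything else is a no-op."""
    if event == "start" and state_in.leader:
        return State(leader=True, unit_status="active")
    return state_in


# Capture the input state once, then replay the same event against it twice.
snapshot = dump_state(State(leader=True))
first_run = charm("start", load_state(snapshot))
second_run = charm("start", load_state(snapshot))
```

Since the snapshot holds everything the toy charm reads, both runs produce the same output state, which is what makes a stored state usable for debugging.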

Strictly defined Arrange -> Act -> Assert tests.

The Harness’ model allows tests with a loose structure:

    def test_foo():
        # Arrange
        h = Harness(MyCharm)

        # Act
        h.mock_this_bit()

        # Assert
        assert h.charm.is_happy()

        # Act
        h.mock_this_other_bit()

        # Act again
        h.mock_this_yet_different_bit()

        # Assert
        assert h.charm.is_still_happy()

        ...

Note that the charm instance remains the same between events and asserts.
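Why a long-lived instance matters can be shown with a toy class. This is not Harness code: `ToyCharm` and its `handle` method are hypothetical, and the counter simply stands in for any attribute a charm might keep on `self`.

```python
class ToyCharm:
    """Hypothetical charm that keeps a counter on the instance."""

    def __init__(self) -> None:
        self.events_seen = 0

    def handle(self, event: str) -> int:
        """Record the event and return how many events this instance has seen."""
        self.events_seen += 1
        return self.events_seen


# Shared instance: the second result depends on the first event having run.
shared = ToyCharm()
shared.handle("install")
shared_result = shared.handle("update-status")

# Fresh instance per event: the result depends only on this one event.
isolated_result = ToyCharm().handle("update-status")
```

With the shared instance, the assertion on `update-status` silently encodes the fact that `install` ran first; with a fresh instance per event, that hidden ordering dependency cannot arise.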

Scenario tests aim to nudge you towards a stricter test structure:

    def test_foo():
        # Arrange
        state_in = State(relations=[...], leader=True, containers=[])

        # Act
        state_out = state_in.trigger('update-status', MyCharm)

        # Assert
        assert state_out.delta(state_in) == [...]  # things you expect to have changed.

Next steps for you

If you like the idea, the API, the project, the story: stay tuned! The project will evolve fast and bring new ideas to the table.

To see what’s there, the documentation currently lives in the GitHub repo. Like what you see? Start contributing. Find a bug? File an issue.

Have fun!

Next steps for us

Not all parts of the Juju world are modelled yet in ops-scenario. We’re still working on implementing the first few batches of scenario tests, and we’ll fill in the missing parts as we go.

We’re also still evolving the API and things might take a little while to settle, so please pin your versions.

One of my long-term goals is to re-couple this with jhack replay to enable the following workflow (and similar):

- install replay on a (failing) CI job

- download the serialized states on which the charm borked

- feed those states into scenario tests, for debugging and regression testing