I’ve run into a problem when deploying a charm that makes use of storage to my remote LXD cluster.

juju deploy ./nextcloud-private.charm --storage data=1G

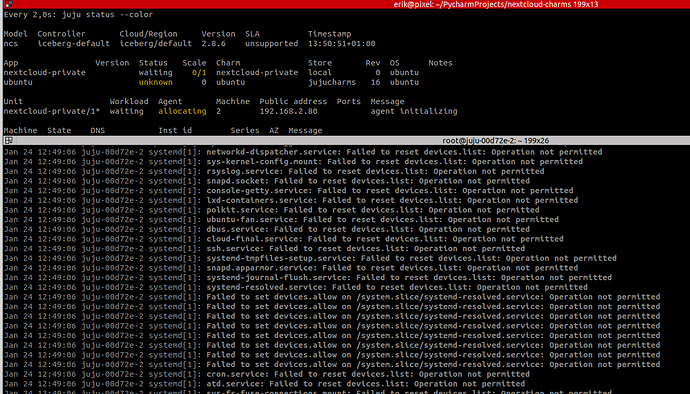

I suspect a permissions problem here is leaving the unit agent stuck in “allocating” → “agent initializing” (as seen in the picture above).

I’m using juju snap client 2.8.7-focal-amd64

server lxd is:

lxd 4.0.4 19032 4.0/stable/… canonical✓ -

Does anyone have experience with deploying charms with storage on LXD?

The same thing works perfectly on AWS, so I suspect this has something to do with the LXD cloud itself.

Should I file a bug somewhere?

I get the same problem, same charm but different machine.

Update:

On AWS, adding storage works in deployment phase. Storage attaches and installation works.

But, when I remove the unit and try to re-use the disk:

juju add-unit nextcloud --attach-storage=data/0

The unit gets stuck in the same way, at “agent initializing” (see image).

The disk (1G) seems attached to the running instance though…

root@ip-172-31-23-20:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

loop0 7:0 0 31.1M 1 loop /snap/snapd/10707

loop1 7:1 0 55.4M 1 loop /snap/core18/1944

loop2 7:2 0 32.3M 1 loop /snap/amazon-ssm-agent/2996

nvme0n1 259:0 0 8G 0 disk

└─nvme0n1p1 259:1 0 8G 0 part /

nvme1n1 259:2 0 1G 0 disk <----------- This disk is the one re-used

@wallyworld … what and where should we look for the problem here?

I tried to reproduce this using the postgresql charm on an AWS model in the eu-north-1 region, but could not get the problem to occur, i.e. add-unit --attach-storage worked fine.

What you are seeing is that Juju will pause the unit agent during the initialisation phase until all storage is attached. You can verify this by running

juju show-status-log nextcloud/1

and seeing that the data-storage-attached hook has not yet run.
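If you would rather check this from a script than read the log by eye, something like the following could work. It is only a minimal sketch: the helper names `hook_has_run` and `fetch_status_log` are hypothetical, and it assumes the status log mentions a hook by name once it has run.

```python
import subprocess


def hook_has_run(status_log: str, hook: str = "data-storage-attached") -> bool:
    """Return True if any line of the status log text mentions the hook name."""
    return any(hook in line for line in status_log.splitlines())


def fetch_status_log(unit: str) -> str:
    """Run `juju show-status-log <unit>` and return its stdout (needs the juju CLI)."""
    result = subprocess.run(
        ["juju", "show-status-log", unit],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

On a machine with the juju client, `hook_has_run(fetch_status_log("nextcloud/1"))` returning False would be consistent with the unit still waiting on storage.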

Juju creates and attaches the requested EBS volume and then attempts to match that newly mounted volume with the storage record stored in the Juju model. Juju doesn’t know ahead of time exactly how a requested volume will be mounted, so it gathers info on all mounted volumes using lsblk and then runs a udevadm query to look at the detailed attributes of those volumes. These are sent back to the controller, which uses them to find the machine block device that corresponds to the record in the Juju model.

In the case of AWS, when a volume is created, there’s a volume ID. And then when the volume gets attached, there’s a device link named like this:

/dev/disk/by-id/nvme-Amazon_Elastic_Block_Store_volxxxxxxx

So Juju looks at the device links of all attached volumes until it finds the one it expects, and then associates that matching volume with the storage record in the Juju data model.

If Juju cannot find an expected volume attachment on a machine, the storage will remain pending and the unit will remain blocked on initialising.
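To make the matching idea concrete, here is a rough sketch of it in Python. This is not Juju's actual code: the function names are hypothetical, and it assumes the link name is the volume ID with its hyphen dropped (`vol-abc` becomes `volabc`), which is inferred from the `volxxxxxxx` pattern above.

```python
import os
from typing import Dict, List, Optional

EBS_LINK_PREFIX = "/dev/disk/by-id/nvme-Amazon_Elastic_Block_Store_"


def expected_link(volume_id: str) -> str:
    """Build the by-id link for a volume ID, assuming the hyphen is dropped."""
    return EBS_LINK_PREFIX + volume_id.replace("-", "")


def find_device(volume_id: str, device_links: Dict[str, List[str]]) -> Optional[str]:
    """Return the device whose links include the expected one, or None."""
    wanted = expected_link(volume_id)
    for device, links in device_links.items():
        if wanted in links:
            return device
    return None  # no match: the storage would stay pending


def scan_by_id(directory: str = "/dev/disk/by-id") -> Dict[str, List[str]]:
    """Map each real device path to the by-id links that point at it."""
    found: Dict[str, List[str]] = {}
    if not os.path.isdir(directory):
        return found
    for name in sorted(os.listdir(directory)):
        link = os.path.join(directory, name)
        found.setdefault(os.path.realpath(link), []).append(link)
    return found
```

Running `find_device(<volume id>, scan_by_id())` on the stuck machine would show whether the re-used disk carries the link name this sketch expects. A `None` result corresponds to the "cannot find an expected volume attachment" case.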

juju status --storage --format yaml will give a little more detail.

There’s also logging that Juju does when trying to match volume attachments on a machine with the storage record in the Juju model. You can turn this on and see where the matching is failing.

juju model-config logging-config="juju.apiserver.storagecommon=TRACE;<root>=INFO"
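If you drive Juju from a script, the same setting can be assembled programmatically. A small sketch, with `logging_config` being a hypothetical helper name: it joins logger=LEVEL pairs with semicolons, and passing the result as a single argv element avoids shell quoting problems with `<root>`.

```python
from typing import Dict


def logging_config(levels: Dict[str, str]) -> str:
    """Join logger=LEVEL pairs with ';' in the form used by logging-config."""
    return ";".join(f"{name}={level}" for name, level in levels.items())


value = logging_config({"juju.apiserver.storagecommon": "TRACE", "<root>": "INFO"})

# argv list suitable for subprocess.run(command, check=True); no shell involved
command = ["juju", "model-config", f"logging-config={value}"]
```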

I’m deploying a new nextcloud charm rewritten in the new operator framework.

You can find the code for it here

https://github.com/erik78se/nextcloud-charms/tree/master/charm-nextcloud-private

Something is going wrong here and I can’t figure out why yet. Also the charm getting stuck at “agent initializing” with little more info tells me that it’s slamming into something more fundamental.

The charm seems to be missing the nextcloud-0.0.1-py3-none-any.whl wheel file listed in requirements.txt

@joakimnyman found some more details. The lib should be available if you run

make build

The charm should contain the nextcloud package

Sorry if I’m missing something but there’s no Makefile that I can see in the source code of the charm from a previous comment. So make build isn’t a thing it seems?

charmcraft build fails because the requirements.txt file refers to a wheel that doesn’t exist.

Oh right, thanks. I was just looking in the charm-nextcloud-private directory itself, mistakenly thinking the charm was self-contained. The charm builds fine. I’ll deploy it tomorrow when I’m back at work and see if I can reproduce.

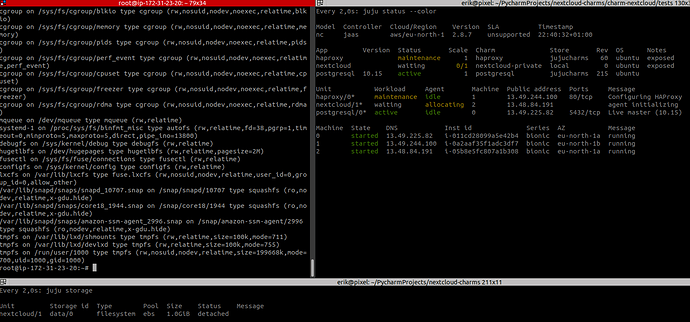

I wasn’t able to reproduce. Can you provide the logs with trace enabled for the juju.apiserver.storagecommon package?

My steps:

/snap/bin/juju bootstrap aws/eu-north-1

/snap/bin/juju model-config logging-config="juju.apiserver.storagecommon=TRACE;<root>=INFO"

/snap/bin/juju deploy ./nextcloud-private.charm --storage data=1G -n 2

/snap/bin/juju status --storage

/snap/bin/juju remove-unit nextcloud-private/0

/snap/bin/juju add-unit nextcloud-private --attach-storage=data/0

/snap/bin/juju status --storage

$ /snap/bin/juju status --storage

Model Controller Cloud/Region Version SLA Timestamp

controller test aws/eu-north-1 2.8.7 unsupported 07:29:31+10:00

App Version Status Scale Charm Store Rev OS Notes

nextcloud-private blocked 2 nextcloud-private local 0 ubuntu

Unit Workload Agent Machine Public address Ports Message

nextcloud-private/1* blocked idle 2 13.53.41.202 No database available.

nextcloud-private/2 blocked idle 3 13.48.178.110 No database available.

Machine State DNS Inst id Series AZ Message

0 started 13.51.69.195 i-01927c7f0ed3429db bionic eu-north-1a running

2 started 13.53.41.202 i-0c0b62592d1b68af5 bionic eu-north-1b running

3 started 13.48.178.110 i-0cb74486417614431 bionic eu-north-1a running

Storage Unit Storage id Type Pool Mountpoint Size Status Message

nextcloud-private/1 data/1 filesystem ebs 1.0GiB attached

nextcloud-private/2 data/0 filesystem ebs 1.0GiB attached