Hello everyone!

I am trying to install OpenStack Base in my lab of 3 HP G8 servers, each with 4 disks: 2x300 GB in RAID 1 as /dev/sda for the OS installation, and 2x600 GB as /dev/sdb and /dev/sdc for OpenStack storage.

Before deploying, I changed only the “osd-devices:” setting in openstack-base/bundle.yaml:

osd-devices: &osd-devices /dev/sdb /dev/sdc
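
For reference, here is a rough sketch of what I expect this setting to produce. It assumes that the ceph-osd charm treats osd-devices as a whitespace-separated list and creates one OSD per listed device on each unit. The function name is only for illustration and is not part of any charm or Juju API.

```python
def expected_osds(osd_devices: str, units: int) -> int:
    """Return the expected OSD count.

    Assumption: one OSD per listed device on every ceph-osd unit.
    """
    devices = osd_devices.split()  # "/dev/sdb /dev/sdc" -> two devices
    return len(devices) * units


# Value from my bundle.yaml, with the 3 ceph-osd units of this deployment.
print(expected_osds("/dev/sdb /dev/sdc", units=3))  # prints 6
```

Under that assumption I expect 2 OSDs per unit and 6 in total, which is what the "Unit is ready (2 OSD)" messages and the "6 osds" line in the Ceph status report.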

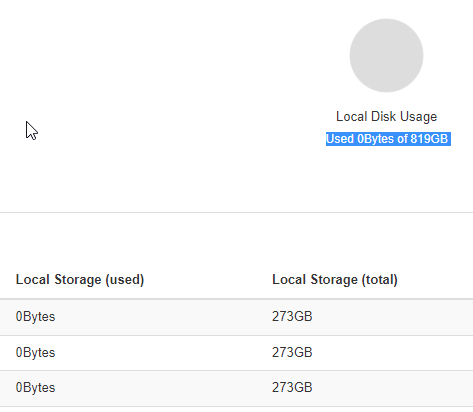

After reinstalling (because of a radosgw issue), everything in juju status is green and active. But in OpenStack Horizon, only the system disks are displayed under local storage.

    Model      Controller       Cloud/Region  Version  SLA          Timestamp
    openstack  juju-controller  maas/default  2.9.15   unsupported  00:31:50+03:00

    App                     Version  Status  Scale  Charm                   Store       Channel  Rev  OS      Message
    ceph-mon                16.2.4   active  3      ceph-mon                charmstore  stable   55   ubuntu  Unit is ready and clustered
    ceph-osd                16.2.4   active  3      ceph-osd                charmstore  stable   310  ubuntu  Unit is ready (2 OSD)
    ceph-radosgw            16.2.4   active  1      ceph-radosgw            charmstore  stable   296  ubuntu  Unit is ready
    cinder                  18.0.0   active  1      cinder                  charmstore  stable   310  ubuntu  Unit is ready
    cinder-ceph             18.0.0   active  1      cinder-ceph             charmstore  stable   262  ubuntu  Unit is ready
    cinder-mysql-router     8.0.26   active  1      mysql-router            charmstore  stable   8    ubuntu  Unit is ready
    dashboard-mysql-router  8.0.26   active  1      mysql-router            charmstore  stable   8    ubuntu  Unit is ready
    glance                  22.0.0   active  1      glance                  charmstore  stable   305  ubuntu  Unit is ready
    glance-mysql-router     8.0.26   active  1      mysql-router            charmstore  stable   8    ubuntu  Unit is ready
    keystone                19.0.0   active  1      keystone                charmstore  stable   323  ubuntu  Application Ready
    keystone-mysql-router   8.0.26   active  1      mysql-router            charmstore  stable   8    ubuntu  Unit is ready
    mysql-innodb-cluster    8.0.26   active  3      mysql-innodb-cluster    charmstore  stable   7    ubuntu  Unit is ready: Mode: R/O, Cluster is ONLINE and can tolerate up to ONE failure.
    neutron-api             18.1.0   active  1      neutron-api             charmstore  stable   294  ubuntu  Unit is ready
    neutron-api-plugin-ovn  18.1.0   active  1      neutron-api-plugin-ovn  charmstore  stable   6    ubuntu  Unit is ready
    neutron-mysql-router    8.0.26   active  1      mysql-router            charmstore  stable   8    ubuntu  Unit is ready
    nova-cloud-controller   23.0.2   active  1      nova-cloud-controller   charmstore  stable   355  ubuntu  Unit is ready
    nova-compute            23.0.2   active  3      nova-compute            charmstore  stable   327  ubuntu  Unit is ready
    nova-mysql-router       8.0.26   active  1      mysql-router            charmstore  stable   8    ubuntu  Unit is ready
    ntp                     3.5      active  3      ntp                     charmstore  stable   45   ubuntu  chrony: Ready
    openstack-dashboard     19.2.0   active  1      openstack-dashboard     charmstore  stable   313  ubuntu  Unit is ready
    ovn-central             20.12.0  active  3      ovn-central             charmstore  stable   7    ubuntu  Unit is ready (northd: active)
    ovn-chassis             20.12.0  active  3      ovn-chassis             charmstore  stable   14   ubuntu  Unit is ready
    placement               5.0.1    active  1      placement               charmstore  stable   19   ubuntu  Unit is ready
    placement-mysql-router  8.0.26   active  1      mysql-router            charmstore  stable   8    ubuntu  Unit is ready
    rabbitmq-server         3.8.2    active  1      rabbitmq-server         charmstore  stable   110  ubuntu  Unit is ready
    vault                   1.5.9    active  1      vault                   charmstore  stable   46   ubuntu  Unit is ready (active: true, mlock: disabled)
    vault-mysql-router      8.0.26   active  1      mysql-router            charmstore  stable   8    ubuntu  Unit is ready

    Unit                         Workload  Agent  Machine  Public address  Ports              Message
    ceph-mon/0                   active    idle   0/lxd/0  10.50.103.116                      Unit is ready and clustered
    ceph-mon/1*                  active    idle   1/lxd/0  10.50.103.111                      Unit is ready and clustered
    ceph-mon/2                   active    idle   2/lxd/0  10.50.103.124                      Unit is ready and clustered
    ceph-osd/0                   active    idle   0        10.50.103.98                       Unit is ready (2 OSD)
    ceph-osd/1*                  active    idle   1        10.50.103.107                      Unit is ready (2 OSD)
    ceph-osd/2                   active    idle   2        10.50.103.93                       Unit is ready (2 OSD)
    ceph-radosgw/0*              active    idle   0/lxd/1  10.50.103.118   80/tcp             Unit is ready
    cinder/0*                    active    idle   1/lxd/1  10.50.103.110   8776/tcp           Unit is ready
      cinder-ceph/0*             active    idle            10.50.103.110                      Unit is ready
      cinder-mysql-router/0*     active    idle            10.50.103.110                      Unit is ready
    glance/0*                    active    idle   2/lxd/1  10.50.103.121   9292/tcp           Unit is ready
      glance-mysql-router/0*     active    idle            10.50.103.121                      Unit is ready
    keystone/0*                  active    idle   0/lxd/2  10.50.103.120   5000/tcp           Unit is ready
      keystone-mysql-router/0*   active    idle            10.50.103.120                      Unit is ready
    mysql-innodb-cluster/0       active    idle   0/lxd/3  10.50.103.119                      Unit is ready: Mode: R/O, Cluster is ONLINE and can tolerate up to ONE failure.
    mysql-innodb-cluster/1*      active    idle   1/lxd/2  10.50.103.108                      Unit is ready: Mode: R/W, Cluster is ONLINE and can tolerate up to ONE failure.
    mysql-innodb-cluster/2       active    idle   2/lxd/2  10.50.103.125                      Unit is ready: Mode: R/O, Cluster is ONLINE and can tolerate up to ONE failure.
    neutron-api/0*               active    idle   1/lxd/3  10.50.103.112   9696/tcp           Unit is ready
      neutron-api-plugin-ovn/0*  active    idle            10.50.103.112                      Unit is ready
      neutron-mysql-router/0*    active    idle            10.50.103.112                      Unit is ready
    nova-cloud-controller/0*     active    idle   0/lxd/4  10.50.103.117   8774/tcp,8775/tcp  Unit is ready
      nova-mysql-router/0*       active    idle            10.50.103.117                      Unit is ready
    nova-compute/0               active    idle   0        10.50.103.98                       Unit is ready
      ntp/1                      active    idle            10.50.103.98    123/udp            chrony: Ready
      ovn-chassis/1              active    idle            10.50.103.98                       Unit is ready
    nova-compute/1*              active    idle   1        10.50.103.107                      Unit is ready
      ntp/0*                     active    idle            10.50.103.107   123/udp            chrony: Ready
      ovn-chassis/0*             active    idle            10.50.103.107                      Unit is ready
    nova-compute/2               active    idle   2        10.50.103.93                       Unit is ready
      ntp/2                      active    idle            10.50.103.93    123/udp            chrony: Ready
      ovn-chassis/2              active    idle            10.50.103.93                       Unit is ready
    openstack-dashboard/0*       active    idle   1/lxd/4  10.50.103.113   80/tcp,443/tcp     Unit is ready
      dashboard-mysql-router/0*  active    idle            10.50.103.113                      Unit is ready
    ovn-central/0                active    idle   0/lxd/5  10.50.103.115   6641/tcp,6642/tcp  Unit is ready (northd: active)
    ovn-central/1*               active    idle   1/lxd/5  10.50.103.109   6641/tcp,6642/tcp  Unit is ready (leader: ovnnb_db, ovnsb_db)
    ovn-central/2                active    idle   2/lxd/3  10.50.103.123   6641/tcp,6642/tcp  Unit is ready
    placement/0*                 active    idle   2/lxd/4  10.50.103.126   8778/tcp           Unit is ready
      placement-mysql-router/0*  active    idle            10.50.103.126                      Unit is ready
    rabbitmq-server/0*           active    idle   2/lxd/5  10.50.103.122   5672/tcp           Unit is ready
    vault/0*                     active    idle   0/lxd/6  10.50.103.114   8200/tcp           Unit is ready (active: true, mlock: disabled)
      vault-mysql-router/0*      active    idle            10.50.103.114                      Unit is ready

    Machine  State    DNS            Inst id              Series  AZ       Message
    0        started  10.50.103.98   ms-osttest003        focal   default  Deployed
    0/lxd/0  started  10.50.103.116  juju-9b141f-0-lxd-0  focal   default  Container started
    0/lxd/1  started  10.50.103.118  juju-9b141f-0-lxd-1  focal   default  Container started
    0/lxd/2  started  10.50.103.120  juju-9b141f-0-lxd-2  focal   default  Container started
    0/lxd/3  started  10.50.103.119  juju-9b141f-0-lxd-3  focal   default  Container started
    0/lxd/4  started  10.50.103.117  juju-9b141f-0-lxd-4  focal   default  Container started
    0/lxd/5  started  10.50.103.115  juju-9b141f-0-lxd-5  focal   default  Container started
    0/lxd/6  started  10.50.103.114  juju-9b141f-0-lxd-6  focal   default  Container started
    1        started  10.50.103.107  ms-osttest002        focal   default  Deployed
    1/lxd/0  started  10.50.103.111  juju-9b141f-1-lxd-0  focal   default  Container started
    1/lxd/1  started  10.50.103.110  juju-9b141f-1-lxd-1  focal   default  Container started
    1/lxd/2  started  10.50.103.108  juju-9b141f-1-lxd-2  focal   default  Container started
    1/lxd/3  started  10.50.103.112  juju-9b141f-1-lxd-3  focal   default  Container started
    1/lxd/4  started  10.50.103.113  juju-9b141f-1-lxd-4  focal   default  Container started
    1/lxd/5  started  10.50.103.109  juju-9b141f-1-lxd-5  focal   default  Container started
    2        started  10.50.103.93   ms-osttest001        focal   default  Deployed
    2/lxd/0  started  10.50.103.124  juju-9b141f-2-lxd-0  focal   default  Container started
    2/lxd/1  started  10.50.103.121  juju-9b141f-2-lxd-1  focal   default  Container started
    2/lxd/2  started  10.50.103.125  juju-9b141f-2-lxd-2  focal   default  Container started
    2/lxd/3  started  10.50.103.123  juju-9b141f-2-lxd-3  focal   default  Container started
    2/lxd/4  started  10.50.103.126  juju-9b141f-2-lxd-4  focal   default  Container started
    2/lxd/5  started  10.50.103.122  juju-9b141f-2-lxd-5  focal   default  Container started

Ceph status shows 3.3 TiB of storage:

    osadmin@maas002:~$ juju ssh ceph-mon/0 sudo ceph status
      cluster:
        id:     2aa3bb5c-2593-11ec-83ff-5da0642f4c69
        health: HEALTH_WARN
                mons are allowing insecure global_id reclaim
                clock skew detected on mon.juju-9b141f-0-lxd-0, mon.juju-9b141f-2-lxd-0

      services:
        mon: 3 daemons, quorum juju-9b141f-1-lxd-0,juju-9b141f-0-lxd-0,juju-9b141f-2-lxd-0 (age 17h)
        mgr: juju-9b141f-1-lxd-0(active, since 17h), standbys: juju-9b141f-0-lxd-0, juju-9b141f-2-lxd-0
        osd: 6 osds: 6 up (since 17h), 6 in (since 17h)
        rgw: 1 daemon active (1 hosts, 1 zones)

      data:
        pools:   18 pools, 171 pgs
        objects: 222 objects, 5.1 KiB
        usage:   415 MiB used, 3.3 TiB / 3.3 TiB avail
        pgs:     171 active+clean
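
As a cross-check of that capacity figure, here is a small sketch of the arithmetic. It assumes each data disk is 600 GB in decimal units as sold, and that all three hosts contribute both data disks. The function name is made up for this example, and real disks usually expose slightly less than their nominal size.

```python
def raw_capacity_tib(disk_gb: int, disks_per_host: int, hosts: int) -> float:
    """Convert nominal decimal-GB disk sizes into total raw capacity in TiB."""
    total_bytes = disk_gb * 10**9 * disks_per_host * hosts
    return total_bytes / 2**40  # 1 TiB = 2**40 bytes


# 3 hosts, each with /dev/sdb and /dev/sdc at a nominal 600 GB.
print(f"{raw_capacity_tib(600, 2, 3):.1f} TiB")  # prints 3.3 TiB
```

So the 3.3 TiB that Ceph reports is consistent with the raw size of all six data disks, which suggests that the disks themselves were picked up by Ceph.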

Could someone please point me in the right direction for diagnosing this?

This is my first OpenStack deployment.