Kubernetes and Tensorflow are two technologies that have been incubated by Google and are rapidly becoming industry standards. The former promises to increase server density for hosted applications; the latter is a leading platform for developing machine learning applications. Kubernetes boosts your data center (virtual or otherwise) and Tensorflow boosts your data scientists.

If your organisation is looking to evaluate either of those tools, it’s possible to do so easily from within your own cloud. This allows you to test the waters without moving any proprietary data outside of the firewall or arranging for any external services to be commissioned.

This post walks you through using the open source software tool Juju to facilitate a working evaluation of these technologies.

Step 1: Install management tools

The first step is to install the necessary software, in this case Juju to manage the infrastructure and kubectl for interacting directly with the Kubernetes cluster that will be provisioned.

Installation instructions for Linux

On Linux, the fastest way to install Juju and kubectl is via snap:

```shell
sudo snap install juju --classic
sudo snap install kubectl --classic
```

Installation instructions for macOS

On macOS, we can use Homebrew to install Juju and kubectl:

```shell
brew install juju
brew install kubectl
```

Installation instructions for MS Windows

On MS Windows, we can use the installer provided by Canonical to install Juju and follow the instructions provided by the Kubernetes project to install kubectl:

- Find the latest Juju installer on the project’s downloads page. Look for the file that matches the pattern juju-setup-{version}-signed.exe with the most recent {version}.

- Follow the Kubernetes project’s kubectl installation instructions.

If you run into any trouble, thorough documentation is provided at the two URLs below. You are also welcome to

- Installing Juju https://docs.jujucharms.com/2.5/en/reference-install

- Installing kubectl https://kubernetes.io/docs/tasks/tools/install-kubectl/
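Once both tools are installed, a quick way to confirm that they are on your PATH is to ask each one for its client version. The exact version strings will vary with the release you installed.

```shell
# Print the version of the installed Juju client
juju version

# Print the kubectl client version only (no cluster is needed yet)
kubectl version --client
```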

Step 2: Add your cloud

If you are running Juju behind the firewall, it may be necessary to tell it about your private cloud. Skip this step if you are using a public cloud provider.

Juju supports adding several types of cloud:

- An ad hoc cloud made up of computers accessible via SSH

- Bare metal servers managed by MAAS

- An OpenStack instance, whether as a private cloud or a public offering

- Clouds defined by VMware vSphere

Within a terminal window, run the juju add-cloud command:

| Input/Output | What we see on screen | Explanation |
|---|---|---|
| Input | juju add-cloud --local | From the shell, we indicate that we want to add a cloud definition that will be accessible for all local controllers (--local). |
| Output | | Juju takes us through an interactive session where it asks for details such as usernames and passwords. The specific questions depend on the cloud type. |
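Instead of answering the interactive prompts, Juju can also read a cloud definition from a YAML file. The sketch below writes such a file for a hypothetical OpenStack cloud; the cloud name mystack, the region RegionOne and the Keystone endpoint are placeholders that you would replace with your own values.

```shell
# Write a cloud definition for a hypothetical OpenStack cloud named "mystack".
# The region name and endpoint below are placeholders, not real values.
cat > mystack.yaml <<'EOF'
clouds:
  mystack:
    type: openstack
    auth-types: [userpass]
    regions:
      RegionOne:
        endpoint: https://keystone.example.com:5000/v3
EOF
```

With the file in place, juju add-cloud --local mystack mystack.yaml should register the cloud without prompting.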

Note: if you would like specialist help for your situation, you are welcome to contact Canonical for personalised assistance.

Step 3: Add credentials

Once Juju has been installed, it needs to be authorised to act on your behalf. The juju autoload-credentials and juju add-credential commands assist here.

Detecting access credentials automatically

Juju can fetch authorisation credentials automatically for several cloud providers, including Amazon Web Services (AWS), Google Compute Engine (GCE), private OpenStack clouds and LXD clusters. These providers store credential files within your home directory, which Juju can detect:

```shell
juju autoload-credentials
```
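To see which credentials autoload-credentials picked up, you can list the credentials Juju now stores locally:

```shell
# List the credentials Juju knows about, grouped by cloud
juju credentials
```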

Add access credentials manually

Juju supports dozens of cloud providers, including treating bare metal servers as a cloud.

```shell
juju add-credential <cloud>
```

Where <cloud> could be one of the following keywords:

| Cloud provider | Juju <cloud> keyword |
|---|---|
| Amazon Web Services (AWS) | aws |
| Microsoft Azure | azure |
| Google Compute Engine (GCE) | google |
| Joyent Triton | joyent |
| Rackspace | rackspace |
| Oracle Cloud Infrastructure | oracle |
| CloudSigma | cloudsigma |
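Before it can deploy anything, Juju needs a controller running on the cloud you have just configured. A minimal sketch of bootstrapping one, assuming the cloud/region aws/us-west-2 and the controller name evaluate-k8s-tf (both taken from the juju status output in Step 6; substitute your own):

```shell
# Create a controller in AWS region us-west-2 named evaluate-k8s-tf
# (cloud, region and controller name are assumptions; use your own).
juju bootstrap aws/us-west-2 evaluate-k8s-tf

# List controllers; the current one is marked with an asterisk
juju controllers
```

Bootstrapping also creates a model called default, which is where the deployment in Step 5 lands.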

Step 4: Access help

If you get stuck at any stage, it can be useful to know where to turn to for help. Hopefully all readers will be able to skip this step!

You have several options available:

- In the shell, run juju help <command>. Usually this documentation will get you unstuck.

- On the web, we provide more thorough documentation.

- You’re welcome to ask questions on Juju’s Discourse forum. Post them in the Users category. The forum is actively monitored by Juju’s developers.

- For those who prefer real-time communication, join the #juju channel on irc.freenode.net. Canonical staff who build Juju monitor this channel 24h and are happy to provide personal support on a best-effort basis.

- If you believe that you’ve found an error with Juju, you’re very welcome to file a bug report. This will require you to create an account with Launchpad, Juju’s bug and release management system.

- Commercial support is readily available. If you would like specialist help for your situation, please contact Canonical.

Step 5: Deploy a production-ready Kubernetes instance with a single line

Kubernetes is a complex software system with many moving parts. Juju handles that complexity with a one-line install that deploys a bundle:

Command to run

```shell
juju deploy canonical-kubernetes
```

Deploying canonical-kubernetes installs the Charmed Distribution of Kubernetes, a production-ready Kubernetes distribution with excellent support for artificial intelligence (AI), machine learning (ML) and high-performance computing (HPC) workloads.

Output from Juju

```
Located bundle "cs:bundle/canonical-kubernetes-499"
Resolving charm: cs:~containers/easyrsa-235
Resolving charm: cs:~containers/etcd-415
Resolving charm: cs:~containers/flannel-404
Resolving charm: cs:~containers/kubeapi-load-balancer-628
Resolving charm: cs:~containers/kubernetes-master-654
Resolving charm: cs:~containers/kubernetes-worker-519
Executing changes:
- upload charm cs:~containers/easyrsa-235 for series bionic
- deploy application easyrsa on bionic using cs:~containers/easyrsa-235
  added resource easyrsa
- set annotations for easyrsa
- upload charm cs:~containers/etcd-415 for series bionic
- deploy application etcd on bionic using cs:~containers/etcd-415
  added resource etcd
  added resource snapshot
- set annotations for etcd
- upload charm cs:~containers/flannel-404 for series bionic
- deploy application flannel on bionic using cs:~containers/flannel-404
  added resource flannel-amd64
  added resource flannel-arm64
  added resource flannel-s390x
- set annotations for flannel
- upload charm cs:~containers/kubeapi-load-balancer-628 for series bionic
- deploy application kubeapi-load-balancer on bionic using cs:~containers/kubeapi-load-balancer-628
- expose kubeapi-load-balancer
- set annotations for kubeapi-load-balancer
- upload charm cs:~containers/kubernetes-master-654 for series bionic
- deploy application kubernetes-master on bionic using cs:~containers/kubernetes-master-654
  added resource cdk-addons
  added resource kube-apiserver
  added resource kube-controller-manager
  added resource kube-proxy
  added resource kube-scheduler
  added resource kubectl
- set annotations for kubernetes-master
- upload charm cs:~containers/kubernetes-worker-519 for series bionic
- deploy application kubernetes-worker on bionic using cs:~containers/kubernetes-worker-519
  added resource cni-amd64
  added resource cni-arm64
  added resource cni-s390x
  added resource kube-proxy
  added resource kubectl
  added resource kubelet
- expose kubernetes-worker
- set annotations for kubernetes-worker
- add relation kubernetes-master:kube-api-endpoint - kubeapi-load-balancer:apiserver
- add relation kubernetes-master:loadbalancer - kubeapi-load-balancer:loadbalancer
- add relation kubernetes-master:kube-control - kubernetes-worker:kube-control
- add relation kubernetes-master:certificates - easyrsa:client
- add relation etcd:certificates - easyrsa:client
- add relation kubernetes-master:etcd - etcd:db
- add relation kubernetes-worker:certificates - easyrsa:client
- add relation kubernetes-worker:kube-api-endpoint - kubeapi-load-balancer:website
- add relation kubeapi-load-balancer:certificates - easyrsa:client
- add relation flannel:etcd - etcd:db
- add relation flannel:cni - kubernetes-master:cni
- add relation flannel:cni - kubernetes-worker:cni
- add unit easyrsa/0 to new machine 0
- add unit etcd/0 to new machine 1
- add unit etcd/1 to new machine 2
- add unit etcd/2 to new machine 3
- add unit kubeapi-load-balancer/0 to new machine 4
- add unit kubernetes-master/0 to new machine 5
- add unit kubernetes-master/1 to new machine 6
- add unit kubernetes-worker/0 to new machine 7
- add unit kubernetes-worker/1 to new machine 8
- add unit kubernetes-worker/2 to new machine 9
Deploy of bundle completed.
```

This output details the steps that Juju intends to take on your behalf. The full deployment takes several minutes.
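Because the deployment carries on in the background, one convenient way to follow its progress is to re-run juju status periodically. This sketch assumes the common watch utility is installed (it ships with most Linux distributions and is available via Homebrew on macOS):

```shell
# Refresh juju status every 5 seconds, keeping its colours; press Ctrl+C to stop
watch -n 5 -c juju status --color
```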

Step 6: Monitor the system

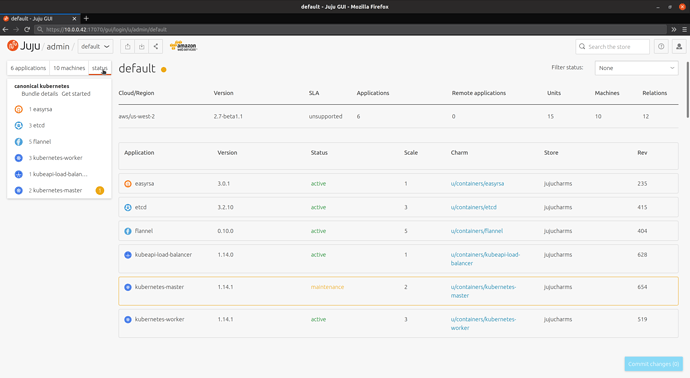

It’s often very useful to be able to get a sense of what’s actually happening in your cluster. Juju provides two main tools for this purpose: its status sub-command and the Juju GUI.

Getting status information from the command line

```shell
juju status
```

If you run juju status during the deployment, you’ll notice that some applications are in the “blocked” or “waiting” state while Juju installs and configures the machines. Once Charmed Kubernetes has been successfully deployed, the output looks like this:

```
Model Controller Cloud/Region Version SLA Timestamp
default evaluate-k8s-tf aws/us-west-2 2.7-beta1.1 unsupported 09:19:48+12:00

App Version Status Scale Charm Store Rev OS Notes
easyrsa 3.0.1 active 1 easyrsa jujucharms 235 ubuntu
etcd 3.2.10 active 3 etcd jujucharms 415 ubuntu
flannel 0.10.0 active 5 flannel jujucharms 404 ubuntu
kubeapi-load-balancer 1.14.0 active 1 kubeapi-load-balancer jujucharms 628 ubuntu exposed
kubernetes-master 1.14.1 active 2 kubernetes-master jujucharms 654 ubuntu
kubernetes-worker 1.14.1 active 3 kubernetes-worker jujucharms 519 ubuntu exposed

Unit Workload Agent Machine Public address Ports Message
easyrsa/0* active idle 0 34.216.50.32 Certificate Authority connected.
etcd/0 active idle 1 52.26.135.252 2379/tcp Healthy with 3 known peers
etcd/1* active idle 2 54.244.152.22 2379/tcp Healthy with 3 known peers
etcd/2 active idle 3 34.209.200.17 2379/tcp Healthy with 3 known peers
kubeapi-load-balancer/0* active idle 4 18.236.235.50 443/tcp Loadbalancer ready.
kubernetes-master/0* active idle 5 54.148.34.194 6443/tcp Kubernetes master running.
  flannel/3 active idle 54.148.34.194 Flannel subnet 10.1.39.1/24
kubernetes-master/1 active idle 6 34.221.36.250 6443/tcp Kubernetes master running.
  flannel/4 active idle 34.221.36.250 Flannel subnet 10.1.24.1/24
kubernetes-worker/0 active idle 7 54.245.153.244 80/tcp,443/tcp Kubernetes worker running.
  flannel/0* active idle 54.245.153.244 Flannel subnet 10.1.19.1/24
kubernetes-worker/1* active idle 8 35.165.101.160 80/tcp,443/tcp Kubernetes worker running.
  flannel/1 active idle 35.165.101.160 Flannel subnet 10.1.66.1/24
kubernetes-worker/2 active idle 9 34.212.131.49 80/tcp,443/tcp Kubernetes worker running.
  flannel/2 active idle 34.212.131.49 Flannel subnet 10.1.68.1/24

Machine State DNS Inst id Series AZ Message
0 started 34.216.50.32 i-0fc17714927269d2d bionic us-west-2a running
1 started 52.26.135.252 i-0502f998d448a7d7d bionic us-west-2a running
2 started 54.244.152.22 i-05a4c46bfeeb2aefb bionic us-west-2b running
3 started 34.209.200.17 i-09fa93f8c5a397984 bionic us-west-2c running
4 started 18.236.235.50 i-0d5329c014539a0ec bionic us-west-2c running
5 started 54.148.34.194 i-00918ab6392acd113 bionic us-west-2a running
6 started 34.221.36.250 i-014c611ece25eec0e bionic us-west-2b running
7 started 54.245.153.244 i-069086c871b70d1f9 bionic us-west-2a running
8 started 35.165.101.160 i-0a5d6de45d86efe26 bionic us-west-2b running
9 started 34.212.131.49 i-0f353dd1a7160469f bionic us-west-2c running
```
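When the full listing is too long, juju status accepts application or unit names to narrow it down, and juju debug-log streams the log messages reported by the units in the model. A short sketch using an application name from the output above:

```shell
# Show only the kubernetes-worker application and its units
juju status kubernetes-worker

# Follow the log messages from the model's units (Ctrl+C to stop)
juju debug-log
```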

Accessing Juju status information via the web

An alternative for people who prefer visual output is Juju’s interactive web interface. To begin, ask Juju for the URL and credentials for connecting to the GUI:

```shell
juju gui
```

Juju responds:

```
GUI 2.14.0 for model "admin/default" is enabled at:
https://10.0.0.42:17070/gui/u/admin/default
Your login credential is:
username: admin
password: 5098c1a4ee3c1b791e825b85ea503e2c
```

Visiting this URL will probably raise a security warning in your browser, because Juju has established its own certificate authority that the browser doesn’t know about. You should accept the warning.

Once logged in, we can inspect the model’s status by clicking on the status tab, which refreshes automatically.

Step 7: Tell kubectl about your new Kubernetes cluster
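The juju scp command in this step writes to ~/.kube/config and will replace any kubectl configuration already there. If you already use kubectl with another cluster, a minimal precaution is to back the file up first; the backup name config.bak is our own choice:

```shell
# Keep a copy of any existing kubectl configuration before it is overwritten
if [ -f ~/.kube/config ]; then
  cp ~/.kube/config ~/.kube/config.bak
fi
```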

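Copy the cluster’s configuration file from the first Kubernetes master to the location kubectl reads by default:

```shell
mkdir -p ~/.kube
juju scp kubernetes-master/0:config ~/.kube/config
```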

We’re now able to test our local machine’s ability to connect:

```shell
kubectl cluster-info
```

```
Kubernetes master is running at https://13.211.252.172:443
Heapster is running at https://13.211.252.172:443/api/v1/namespaces/kube-system/services/heapster/proxy
CoreDNS is running at https://13.211.252.172:443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
Metrics-server is running at https://13.211.252.172:443/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy
Grafana is running at https://13.211.252.172:443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
InfluxDB is running at https://13.211.252.172:443/api/v1/namespaces/kube-system/services/monitoring-influxdb:http/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
```
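As a further check that kubectl can reach the cluster, you can list its nodes and the system pods. Each kubernetes-worker unit should appear as a node and eventually report a Ready status:

```shell
# List the cluster's nodes and their readiness
kubectl get nodes

# List the add-on pods (CoreDNS, metrics-server and so on) in kube-system
kubectl get pods --namespace kube-system
```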

Step 8: Add a storage class

Using integrator charms

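Azure, AWS and GCE have specialist integrator charms that give Charmed Kubernetes access to the particular cloud’s APIs.

On AWS, the process looks like this:

```shell
juju deploy --to 0 aws-integrator
juju relate aws-integrator kubernetes-master
juju relate aws-integrator kubernetes-worker
juju trust aws-integrator
```

The --to 0 option places the integrator on machine 0 (alongside easyrsa) rather than provisioning a new machine, and juju trust grants the charm access to the cloud credential that Juju is using.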

Manually defining a storage class

Without the integrator charms, we can use kubectl directly:

```shell
kubectl create -f - <<STORAGE
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: default
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2
STORAGE
```

```
storageclass.storage.k8s.io/default created
```

This storage class definition is specific to AWS. The Kubernetes documentation provides detailed information about defining storage classes for specific cloud providers.
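Whichever route you take, you can check that the cluster now has a default storage class; kubectl marks it with "(default)" after its name. If you used the integrator charm, its status is worth a look too:

```shell
# List storage classes; the default one is flagged "(default)"
kubectl get storageclass

# If you deployed aws-integrator, check that its unit has settled
juju status aws-integrator
```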

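Step 9: Enable Juju to drive the Charmed Kubernetes we have created

We’re now in a position to register our Charmed Kubernetes cluster with Juju as a cloud. juju add-k8s reads the cluster’s details from the kubectl configuration we copied in Step 7, and --storage=default points it at the storage class from Step 8:

```shell
juju add-k8s k8s-tf --storage=default --region=aws/ap-southeast-2
```

```
k8s substrate "aws/ap-southeast-2" added as cloud "k8s-tf" with storage provisioned
by the existing "default" storage class
operator storage provisioned by the workload storage class.
```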

Hurray! Juju now knows about our Charmed Kubernetes cluster, which we’ve called k8s-tf.
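To confirm the registration, you can list the clouds Juju knows about and inspect the new one:

```shell
# k8s-tf should appear in the list of clouds
juju clouds

# Show the details Juju recorded for k8s-tf
juju show-cloud k8s-tf
```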

Step 10: Deploy Tensorflow on Kubernetes

Here is a summary of our current state:

- A cluster of machines in the cloud, managed by a Juju controller called evaluate-k8s-tf

- A Charmed Kubernetes instance deployed onto that cluster, known to Juju as the cloud k8s-tf

We now want to install Tensorflow on top of k8s-tf. To do that, we need to add a Juju “model”. Model is the term used by the Juju community, whereas others may use the term “stack”, “project” or “product”. A Juju model encapsulates all of the cloud components that are necessary as part of a Software as a Service offering.

```shell
juju add-model tf k8s-tf
```

Output from juju add-model tf k8s-tf

```
Added 'tf' model on k8s-datascience with credential 'admin' for user 'admin'
```

Next, create a Juju storage pool named k8s-ebs that provisions volumes through the default storage class:

```shell
juju create-storage-pool k8s-ebs kubernetes storage-class=default
```
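To double-check the pool, list the storage pools available to the current model (juju add-model switched us to tf):

```shell
# k8s-ebs should be listed with the kubernetes provider
juju storage-pools
```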

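We’re now in a position to deploy Kubeflow on top of our Kubernetes cluster. Kubeflow is a machine learning toolkit for Kubernetes; as the output below shows, its bundle includes a Tensorflow job operator and dashboard alongside JupyterHub, a PyTorch operator and Seldon:

```shell
juju deploy kubeflow
```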

Output from juju deploy kubeflow

```
Located bundle "cs:bundle/kubeflow-46"
Resolving charm: cs:~kubeflow-charmers/kubeflow-ambassador
Resolving charm: cs:~kubeflow-charmers/kubeflow-jupyterhub
Resolving charm: cs:~kubeflow-charmers/kubeflow-pytorch-operator
Resolving charm: cs:~kubeflow-charmers/kubeflow-seldon-api-frontend
Resolving charm: cs:~kubeflow-charmers/kubeflow-seldon-cluster-manager
Resolving charm: cs:~kubeflow-charmers/kubeflow-tf-job-dashboard
Resolving charm: cs:~kubeflow-charmers/kubeflow-tf-job-operator
Resolving charm: cs:~kubeflow-charmers/redis-k8s
Executing changes:
- upload charm cs:~kubeflow-charmers/kubeflow-ambassador-46 for series kubernetes
- deploy application kubeflow-ambassador with 1 unit on kubernetes using cs:~kubeflow-charmers/kubeflow-ambassador-46
  added resource ambassador-image
- set annotations for kubeflow-ambassador
- upload charm cs:~kubeflow-charmers/kubeflow-jupyterhub-42 for series kubernetes
- deploy application kubeflow-jupyterhub with 1 unit on kubernetes using cs:~kubeflow-charmers/kubeflow-jupyterhub-42
  added resource jupyterhub-image
- set annotations for kubeflow-jupyterhub
- upload charm cs:~kubeflow-charmers/kubeflow-pytorch-operator-45 for series kubernetes
- deploy application kubeflow-pytorch-operator with 1 unit on kubernetes using cs:~kubeflow-charmers/kubeflow-pytorch-operator-45
  added resource pytorch-operator-image
- set annotations for kubeflow-pytorch-operator
- upload charm cs:~kubeflow-charmers/kubeflow-seldon-api-frontend-31 for series kubernetes
- deploy application kubeflow-seldon-api-frontend with 1 unit on kubernetes using cs:~kubeflow-charmers/kubeflow-seldon-api-frontend-31
  added resource api-frontend-image
- set annotations for kubeflow-seldon-api-frontend
- upload charm cs:~kubeflow-charmers/kubeflow-seldon-cluster-manager-29 for series kubernetes
- deploy application kubeflow-seldon-cluster-manager with 1 unit on kubernetes using cs:~kubeflow-charmers/kubeflow-seldon-cluster-manager-29
  added resource cluster-manager-image
- set annotations for kubeflow-seldon-cluster-manager
- upload charm cs:~kubeflow-charmers/kubeflow-tf-job-dashboard-44 for series kubernetes
- deploy application kubeflow-tf-job-dashboard with 1 unit on kubernetes using cs:~kubeflow-charmers/kubeflow-tf-job-dashboard-44
  added resource tf-operator-image
- set annotations for kubeflow-tf-job-dashboard
- upload charm cs:~kubeflow-charmers/kubeflow-tf-job-operator-43 for series kubernetes
- deploy application kubeflow-tf-job-operator with 1 unit on kubernetes using cs:~kubeflow-charmers/kubeflow-tf-job-operator-43
  added resource tf-operator-image
- set annotations for kubeflow-tf-job-operator
- upload charm cs:~kubeflow-charmers/redis-k8s-6 for series kubernetes
- deploy application redis with 1 unit on kubernetes using cs:~kubeflow-charmers/redis-k8s-6
  added resource redis-image
- set annotations for redis
- add relation kubeflow-tf-job-dashboard - kubeflow-ambassador
- add relation kubeflow-jupyterhub - kubeflow-ambassador
- add relation kubeflow-seldon-api-frontend - redis
- add relation kubeflow-seldon-cluster-manager - redis
Deploy of bundle completed.
```
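The bundle deploys asynchronously, so the pods take a while to start. A sketch for following progress from both sides, assuming (as is the case for Juju 2.x Kubernetes models) that the tf model is backed by a Kubernetes namespace of the same name:

```shell
# Watch the Kubeflow applications in the tf model settle
juju status -m tf

# Inspect the corresponding pods directly, assuming the namespace is named "tf"
kubectl get pods --namespace tf
```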