Configuring Patroni-based PostgreSQL asynchronous replication

Warning: This is an internal article. Do not use it in production!

Contact the Canonical Data Platform team if you are interested in this topic.

Introduction

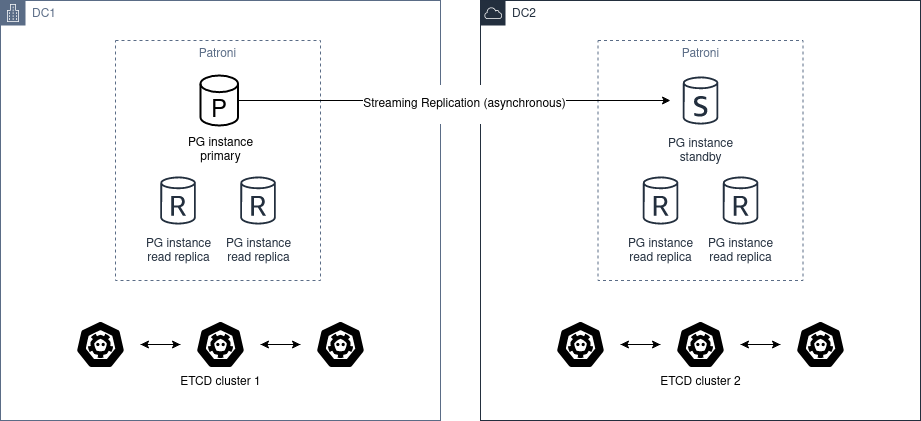

With only two data centers, it would be better to have two independent clusters and run Patroni standby cluster in the second data center. If the first site is down, you can MANUALLY promote the standby_cluster.

The architecture diagram would be the following:

Automatic promotion is not possible, because DC2 will never able to figure out the state of DC1.

Design

Let’s create 2x new relations and 2x new actions:

async-primaryrelation: this is the relation that represents the leader unit, it receives all the data from standby-clusters and generates the configuration for the leaderasync-replicarelation: relation used by each standby cluster to connect with the leader unitpromote-standby-clusteraction: this action informs one of the clusters that it should be promoted to a primarydemote-primary-clusteraction: likewise, cleans up the state and demotes the primary cluster.

UX

The UX is described as follows:

First, deploy two models in the same k8s cluster:

juju add-model psql-1

juju deploy postgresql-k8s --trust

juju add-model psql-2

juju deploy postgresql-k8s --trust

Then, configure async replication as follows:

juju switch psql-1

juju offer postgresql-k8s:async-primary async-primary # async-primary is the relation provided by the leader

juju switch psql-2

juju consume admin/psql-1.async-primary # consume the primary relation

Finally, set the relation and run the promotion action:

juju relate postgresql-k8s:async-replica async-primary # Both units are now related, where postgresql-k8s in model psql-2 is the standby-leader

juju run -m psql-1 postgresql-k8s/0 promote-standby-cluster # move postgresql-k8s in model psql-1 to be the leader cluster

Once the models settled, it is possible to check the status within one of the postgresql units.

For example, the following status can be seen in standby’s patroni:

$ PATRONI_KUBERNETES_LABELS='{application: patroni, cluster-name: patroni-postgresql-k8s}' \

PATRONI_KUBERNETES_NAMESPACE=psql-2 \

PATRONI_KUBERNETES_USE_ENDPOINTS=true \

PATRONI_NAME=postgresql-k8s-0 \

PATRONI_REPLICATION_USERNAME=replication \

PATRONI_SCOPE=patroni-postgresql-k8s \

PATRONI_SUPERUSER_USERNAME=operator \

patronictl -c /var/lib/postgresql/data/patroni.yml list

The role should be “Standby leader” and State should be “Running”.

Failover

The fail/switchover logic is the following:

First, we offer async-primary from both models:

juju switch psql-1

juju offer postgresql-k8s:async-primary async-primary

juju switch psql-2

juju offer postgresql-k8s:async-primary async-primary

Then, we consume in each model the async-primary relation:

juju consume admin/psql-2.async-primary

juju relate -m psql-1 postgresql-k8s:async-replica async-primary

juju consume admin/psql-1.async-primary

juju relate -m psql-2 postgresql-k8s:async-replica async-primary

Once that setup is done, the postgresql apps knows there is an async replication available, but will not implement the actual configuration. That will happen once we run the promote-standby-cluster action on one of the models.

At that moment, the model where the action ran should take over and become the primary. The remaining will continue as replicas.

At switchover, the user must initiate the process. That should be:

juju run -m <model-with-old-primary> postgresql-k8s/leader demote-primary-cluster

juju run -m <mode-with-new-primary> postgresql-k8s/leader promote-standby-cluster

The demote should not be successful if the target unit sees a cluster as “primary” still connected in its async-replica relation.

In case of failover, depending on the state of the primary cluster, we may end up with Juju knowing about the primary cluster and having its databag (with the primary key set); but the cluster is gone. Indeed, we would need here to pull the relation out first, possibly with --force, then promote one of the replica clusters as leader.