I followed this guide https://ubuntu.com/kubernetes/docs/install-local to install Charmed Kubernetes via Juju on my server.

After a lot of trial and error, I finally have an installation where everything is running and active, except that the kubernetes-master units are stuck in a waiting state with the message `Waiting for 3 kube-system pods to start`.
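The status message refers to pods in the `kube-system` namespace, so a natural next step is to list which of those pods are not up. The sketch below assumes `kubectl` is installed and that the cluster's kubeconfig has been copied locally (the Charmed Kubernetes docs do this with `juju scp kubernetes-master/0:config ~/.kube/config`). The function name `kube_system_not_running` is hypothetical, and its filter is not necessarily the exact check the charm performs:

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get pods --no-headers` output on stdin
# and prints NAME and STATUS for every pod that is neither Running nor Completed.
# Assumed columns: NAME READY STATUS RESTARTS AGE (STATUS is field 3).
kube_system_not_running() {
  awk '$3 != "Running" && $3 != "Completed" { print $1, $3 }'
}

# Example invocation (requires a working kubeconfig for the cluster):
#   kubectl get pods -n kube-system --no-headers | kube_system_not_running
```

For any pod it prints, `kubectl describe pod -n kube-system <name>` shows the events that explain why the pod is stuck.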

I retrieved the unit log from the kubernetes-master by running `juju ssh kubernetes-master/0` and then, on the unit, `sudo pastebinit /var/log/juju/unit-kubernetes-master-0.log`.

The result can be found here: https://paste.ubuntu.com/p/qgvQwzZTrP/

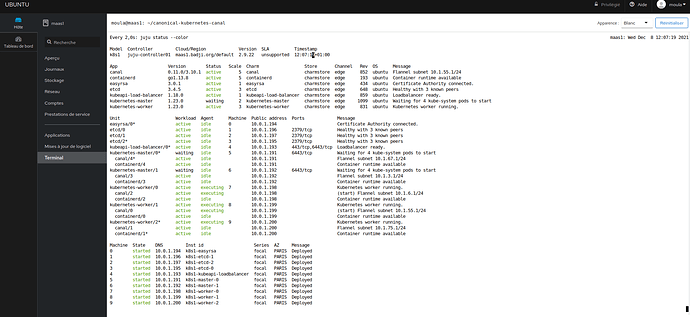

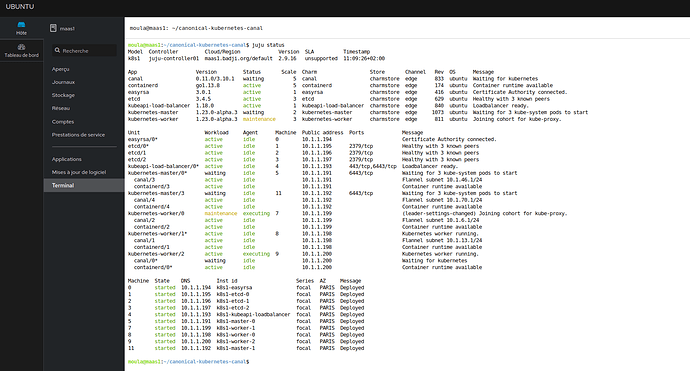

Output of `juju status`:

```
Model  Controller           Cloud/Region         Version  SLA          Timestamp
k8s    localhost-localhost  localhost/localhost  2.8.7    unsupported  22:35:04+01:00

App                    Version  Status   Scale  Charm                  Store       Rev  OS      Notes
containerd             1.3.3    active       5  containerd             jujucharms  100  ubuntu
easyrsa                3.0.1    active       1  easyrsa                jujucharms  342  ubuntu
etcd                   3.4.5    active       3  etcd                   jujucharms  546  ubuntu
flannel                0.11.0   active       5  flannel                jujucharms  514  ubuntu
kubeapi-load-balancer  1.18.0   active       1  kubeapi-load-balancer  jujucharms  754  ubuntu  exposed
kubernetes-master      1.20.1   waiting      2  kubernetes-master      jujucharms  926  ubuntu
kubernetes-worker      1.20.1   active       3  kubernetes-worker      jujucharms  718  ubuntu  exposed

Unit                     Workload  Agent  Machine  Public address  Ports           Message
easyrsa/0*               active    idle   0        10.100.99.131                   Certificate Authority connected.
etcd/0*                  active    idle   1        10.100.99.120   2379/tcp        Healthy with 3 known peers
etcd/1                   active    idle   2        10.100.99.182   2379/tcp        Healthy with 3 known peers
etcd/2                   active    idle   3        10.100.99.121   2379/tcp        Healthy with 3 known peers
kubeapi-load-balancer/0* active    idle   4        10.100.99.136   443/tcp         Loadbalancer ready.
kubernetes-master/0*     waiting   idle   5        10.100.99.199   6443/tcp        Waiting for 3 kube-system pods to start
  containerd/4           active    idle            10.100.99.199                   Container runtime available
  flannel/4              active    idle            10.100.99.199                   Flannel subnet 10.1.25.1/24
kubernetes-master/1      waiting   idle   6        10.100.99.45    6443/tcp        Waiting for 3 kube-system pods to start
  containerd/3           active    idle            10.100.99.45                    Container runtime available
  flannel/3              active    idle            10.100.99.45                    Flannel subnet 10.1.70.1/24
kubernetes-worker/0      active    idle   7        10.100.99.141   80/tcp,443/tcp  Kubernetes worker running.
  containerd/1           active    idle            10.100.99.141                   Container runtime available
  flannel/1              active    idle            10.100.99.141                   Flannel subnet 10.1.53.1/24
kubernetes-worker/1      active    idle   8        10.100.99.183   80/tcp,443/tcp  Kubernetes worker running.
  containerd/2           active    idle            10.100.99.183                   Container runtime available
  flannel/2              active    idle            10.100.99.183                   Flannel subnet 10.1.77.1/24
kubernetes-worker/2*     active    idle   9        10.100.99.6     80/tcp,443/tcp  Kubernetes worker running.
  containerd/0*          active    idle            10.100.99.6                     Container runtime available
  flannel/0*             active    idle            10.100.99.6                     Flannel subnet 10.1.12.1/24

Machine  State    DNS            Inst id        Series  AZ  Message
0        started  10.100.99.131  juju-ddaa0b-0  focal       Running
1        started  10.100.99.120  juju-ddaa0b-1  focal       Running
2        started  10.100.99.182  juju-ddaa0b-2  focal       Running
3        started  10.100.99.121  juju-ddaa0b-3  focal       Running
4        started  10.100.99.136  juju-ddaa0b-4  focal       Running
5        started  10.100.99.199  juju-ddaa0b-5  focal       Running
6        started  10.100.99.45   juju-ddaa0b-6  focal       Running
7        started  10.100.99.141  juju-ddaa0b-7  focal       Running
8        started  10.100.99.183  juju-ddaa0b-8  focal       Running
9        started  10.100.99.6    juju-ddaa0b-9  focal       Running
```
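In a model this size it can help to pull out only the units that are not `active`. Below is a minimal sketch that filters the plain-text `juju status` output, assuming the default tabular layout (a `Unit  Workload ...` header row, sections separated by blank lines, Workload as the second field). The function name `non_active_units` is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: reads plain `juju status` output on stdin and prints
# every unit in the Unit section whose Workload column is not "active".
non_active_units() {
  awk '
    $1 == "Unit" && $2 == "Workload" { in_units = 1; next }  # header of the Unit section
    NF == 0 { in_units = 0; next }                           # a blank line ends the section
    in_units && $2 != "active" { print $1, $2 }              # subordinate rows are indented, awk skips the indent
  '
}

# Example invocation:
#   juju status | non_active_units
```

Against the output above, this would list only `kubernetes-master/0*` and `kubernetes-master/1`, both `waiting`.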

No, really, the problem is with the master nodes. The workers, even the ones with GPUs, deploy correctly. Thanks.

No, Really there is a problem with the masters nodes. the Workers even with the GPU deploy correctly. Thank’s.