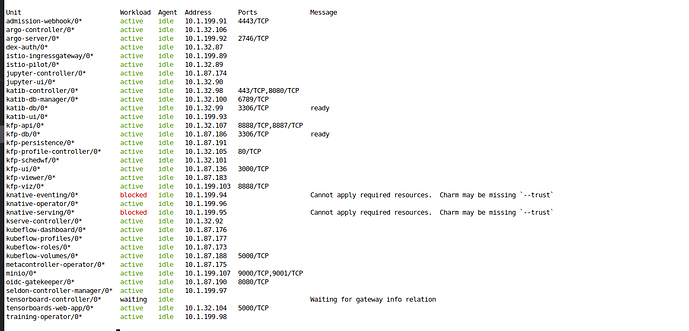

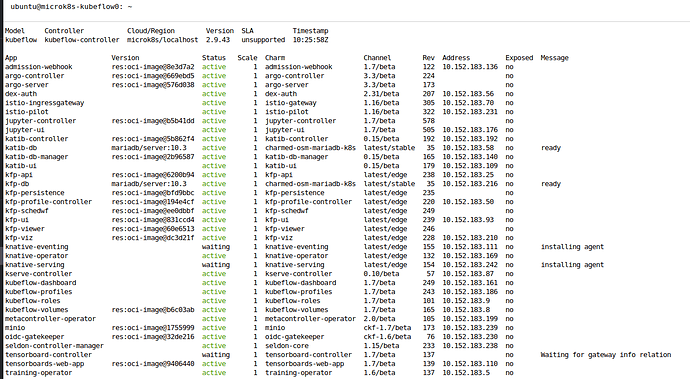

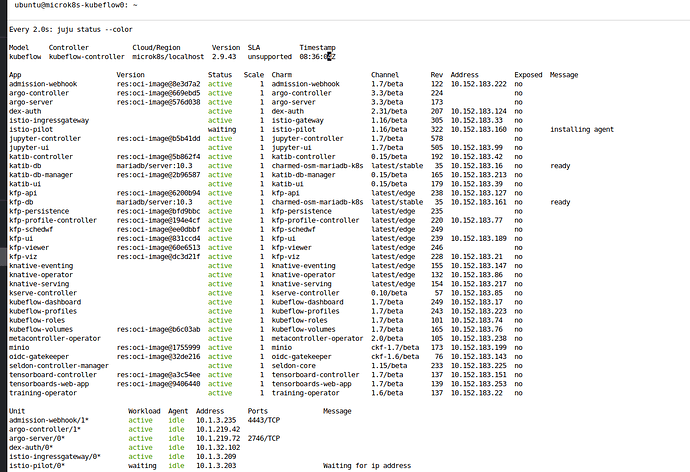

Charmed Kubeflow 1.7 beta has the following known issues:

Pods in error state with the message “too many open files”

In a MicroK8s environment, containers sometimes go into an error state with the message “too many open files”. If that happens, run the following commands on the host to raise the inotify limits, then restart any pod in error state.

sudo sysctl fs.inotify.max_user_instances=1280

sudo sysctl fs.inotify.max_user_watches=655360
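Values set with `sysctl` on the command line are lost on reboot. The following is a minimal sketch, not an official procedure. It persists the two limits in a file under `/etc/sysctl.d/`, which `sysctl --system` reads, and then deletes the pods whose STATUS is `Error` so their controllers recreate them. The file name `99-kubeflow-inotify.conf` and both function names are hypothetical. The sketch also assumes the erroring pods are owned by a controller such as a StatefulSet or Deployment.

```shell
#!/usr/bin/env bash
# Sketch only: the file name is a hypothetical choice; any *.conf file
# under /etc/sysctl.d/ is loaded by `sysctl --system`.
SYSCTL_FILE="${SYSCTL_FILE:-/etc/sysctl.d/99-kubeflow-inotify.conf}"

# Persist the inotify limits from this page and reload all sysctl files.
persist_inotify_limits() {
  printf '%s\n' \
    'fs.inotify.max_user_instances=1280' \
    'fs.inotify.max_user_watches=655360' | sudo tee "$SYSCTL_FILE" >/dev/null
  sudo sysctl --system
}

# Delete every pod whose STATUS column (4th with --all-namespaces) is "Error",
# so that its owning controller recreates it.
# Assumption: pods are controller-managed; a bare pod would not come back.
restart_error_pods() {
  kubectl get pods --all-namespaces --no-headers \
    | awk '$4 == "Error" { print $1, $2 }' \
    | while read -r ns pod; do
        kubectl delete pod -n "$ns" "$pod"
      done
}
```

After sourcing the script, run `persist_inotify_limits` and then `restart_error_pods`. On MicroK8s, `kubectl` may only be available as `microk8s kubectl`, so you may need to alias it first.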

tensorboard-controller stuck in waiting state

If the tensorboard-controller unit stays in waiting status with the message “Waiting for gateway info relation” for a long time, run the following command:

juju run --unit istio-pilot/0 -- "export JUJU_DISPATCH_PATH=hooks/config-changed; ./dispatch"
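If you only want to re-run the hook when it is actually needed, you can check the unit's status message first. This is a minimal sketch with hypothetical function names. It assumes the message appears verbatim in the plain `juju status` output and that the istio-pilot unit is `istio-pilot/0`, as in the command above.

```shell
#!/usr/bin/env bash
# Sketch: re-run istio-pilot's config-changed hook only while
# tensorboard-controller still reports the gateway-relation wait message.
TENSORBOARD_WAIT_MSG="Waiting for gateway info relation"

rerun_istio_config_changed() {
  # Same workaround command as on this page; assumes unit istio-pilot/0.
  juju run --unit istio-pilot/0 -- "export JUJU_DISPATCH_PATH=hooks/config-changed; ./dispatch"
}

fix_tensorboard_if_waiting() {
  if juju status tensorboard-controller | grep -qF "$TENSORBOARD_WAIT_MSG"; then
    rerun_istio_config_changed
  else
    echo "tensorboard-controller is not waiting for gateway info"
  fi
}
```

The `juju run --unit` form is Juju 2.9 syntax. On Juju 3.x the equivalent command is `juju exec`, because `juju run` there runs actions.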

Associated issue

#32. The bug is attributed to istio-pilot; see “The default gateway is not created” below for more information.

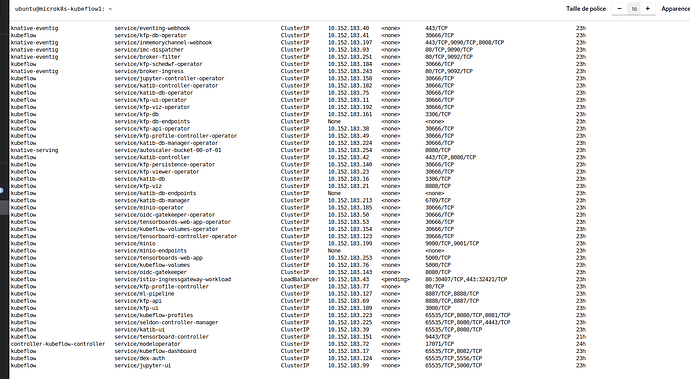

The default gateway is not created

Errors you may encounter that could be related to this issue:

- tensorboard-controller is stuck in “Waiting for gateway info relation”

- The dashboard is unreachable after setting up dex-auth and oidc-gatekeeper

This issue prevents kubeflow-gateway from being created. To check whether your deployment is affected, check whether kubeflow-gateway exists:

kubectl get gateway -nkubeflow kubeflow-gateway

If the command reports that the gateway is not found, or returns nothing, run the following command and wait for istio-pilot to become active and idle:

juju run --unit istio-pilot/0 -- "export JUJU_DISPATCH_PATH=hooks/config-changed; ./dispatch"
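You can combine the check and the workaround so the hook only runs when the gateway is missing. This minimal sketch uses hypothetical function names. It relies on `kubectl get` exiting with a non-zero code when the named resource does not exist, and it assumes the same `istio-pilot/0` unit as above.

```shell
#!/usr/bin/env bash
# Sketch: re-run istio-pilot's config-changed hook only if kubeflow-gateway is absent.
gateway_exists() {
  # kubectl exits non-zero (NotFound) when the gateway does not exist.
  kubectl get gateway -n kubeflow kubeflow-gateway >/dev/null 2>&1
}

ensure_kubeflow_gateway() {
  if gateway_exists; then
    echo "kubeflow-gateway present"
    return 0
  fi
  echo "kubeflow-gateway missing; re-running istio-pilot config-changed"
  juju run --unit istio-pilot/0 -- "export JUJU_DISPATCH_PATH=hooks/config-changed; ./dispatch"
}
```

After running `ensure_kubeflow_gateway`, watch `juju status istio-pilot` until the unit is active and idle, then repeat the `kubectl get gateway` check.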

Associated issue

#113

Notebooks appear not to have access to Pipelines

If you create a notebook (with any framework) and try to connect to Kubeflow Pipelines through the API (e.g. using create_run_from_pipeline_func()), you may hit the following error:

ERROR:root:Failed to read a token from file '/var/run/secrets/kubeflow/pipelines/token'

If that is the case, make sure you selected the “Allow access to Kubeflow Pipelines” option in the “Advanced section” of the notebook creation page.
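To confirm from inside the notebook, for example from a JupyterLab terminal, that the option took effect, you can check that the token file named in the error exists and is non-empty. This is a minimal sketch. The function name is hypothetical, and the path defaults to the one in the error message.

```shell
#!/usr/bin/env bash
# Sketch: verify that the Kubeflow Pipelines token file is mounted.
# The default path is the one reported in the error message above.
check_pipelines_token() {
  local token_file="${1:-/var/run/secrets/kubeflow/pipelines/token}"
  if [ -s "$token_file" ]; then
    echo "token found: $token_file"
  else
    echo "token missing: $token_file (create the notebook with pipelines access selected)" >&2
    return 1
  fi
}
```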

Associated issue

#159. The issue has been labeled as a regression from 1.6 and will be fixed soon.

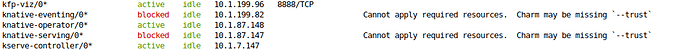

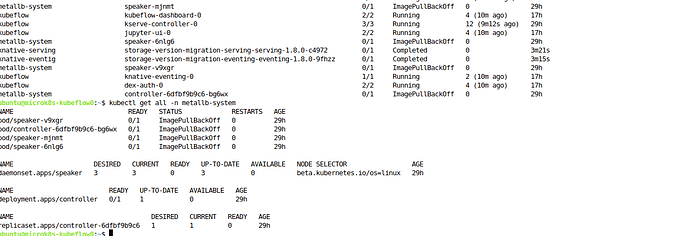

knative-serving and knative-eventing are in error state

These charms might be missing some configuration. Run the following commands to set it and clear their error state:

For knative-serving

juju config knative-serving namespace="knative-serving" istio.gateway.namespace=kubeflow istio.gateway.name=kubeflow-gateway

juju resolved knative-serving/<unit-number>

For knative-eventing

juju config knative-eventing namespace="knative-eventing"

juju resolved knative-eventing/<unit-number>
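You can collect the knative steps above into one function. This is a minimal sketch with a hypothetical function name. It assumes each knative charm has a single unit, numbered `0` unless you pass another number. Use `juju status knative-serving knative-eventing` to find the actual unit numbers.

```shell
#!/usr/bin/env bash
# Sketch: apply the knative-serving and knative-eventing workaround in one go.
# Usage: fix_knative [serving-unit-number] [eventing-unit-number]  (both default to 0)
fix_knative() {
  local serving_unit="${1:-0}" eventing_unit="${2:-0}"

  # knative-serving: set namespace and Istio gateway, then clear the error.
  juju config knative-serving namespace="knative-serving" \
    istio.gateway.namespace=kubeflow istio.gateway.name=kubeflow-gateway
  juju resolved "knative-serving/${serving_unit}"

  # knative-eventing: set namespace, then clear the error.
  juju config knative-eventing namespace="knative-eventing"
  juju resolved "knative-eventing/${eventing_unit}"
}
```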

Note that this workaround applies only in the Charmed Kubeflow context. If you are deploying these charms standalone, follow the instructions in their README.

Some units are stuck in blocked status

If the blocked charm is one of the following:

- KubeflowProfiles

- KubeflowDashboard

- Seldon

- TrainingOperator

- MLflow

Please refer to the associated issue below for more information.

Associated issue

#549
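To see which units are blocked before comparing them against the list above, you can filter the unit rows of the tabular `juju status` output. This is a minimal sketch with a hypothetical function name. It assumes the default column layout: unit name first, workload status second, and the leader marked with `*`. If `jq` is available, `juju status --format=json` would be a sturdier source.

```shell
#!/usr/bin/env bash
# Sketch: print the names of units whose workload status is "blocked".
# Assumes unit rows in `juju status` look like:
#   kubeflow-profiles/0*  blocked  idle  10.1.2.3  ...  <message>
list_blocked_units() {
  juju status | awk '
    $1 ~ /^[a-z0-9-]+\/[0-9]+\*?$/ && $2 == "blocked" {
      sub(/\*$/, "", $1)   # drop the leader marker
      print $1
    }'
}
```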
