Introduction

One of the problems that someone will come across when they begin to work with the juju code-base is the fact that it has organically evolved over several years. As such, different parts of the code-base use different design patterns (some good, some questionable) thus making the code-base hard to comprehend and refactor.

With this post, I would like to focus on one of the key (in my view) issues with juju which has quite a few repercussions in the overall quality and testability of juju itself: the fact that business logic is inextricably coupled with the underlying persistence layer.

To clarify, by coupling, I don’t mean passing simply DB instances around but rather having our business logic generate low-level mongo transactions! This makes it not only impossible (for now) to switch to a different data store in the future, but it also makes testing really complicated as explained in other discourse posts (TLDR: all tests are essentially integration tests that interact with the database).

Up to the recent years, this coupling seemed to be constrained within the state package. However, recently, low-level DB implementation details have started to leak to other packages. One such example would be the apiserver package which hosts the facade code that implements juju’s client API. This observation has led me to publish this post.

Can we do any better?

In my opinion, yes! With this post, I would like to propose a different modeling and code organization approach that could potentially resolve the aforementioned issues. The proposal exploits the observation that juju is primarily an event-driven system: the business logic applies a bunch of rules to manipulate the contents of our database. The juju workers, watch particular collections in the database for changes and apply any required actions to sync their current state to the one recorded in the database.

The proposal aims to:

- Completely decouple the business logic from the underlying data store implementation. By abstracting the data store behind an interface we gain the benefit of being able to switch to a different data store in the future (e.g. dqlite).

- Enforce strict vertical boundaries between the api-server facade code and the business logic layer.

- Write significantly more unit-tests for our packages (mocking their dependencies) and judiciously use e2e and bash-based integration tests to test the system as a whole.

- Allow us to use the proposed way for new feature work while also enabling us to incrementally untangle and port existing business logic to the new system.

How would this work?

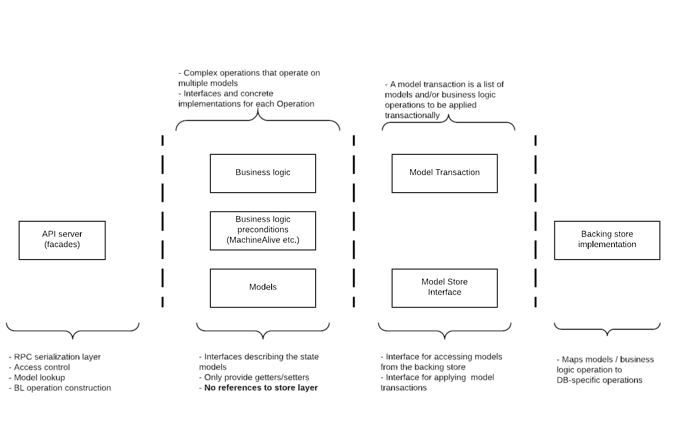

The following figure summarizes the vertical boundaries introduced by this proposal and the responsibilities encapsulated within each boundary.

Models

The first step would be to define interfaces for the various models used by juju (e.g. a Machine, a Unit). These interfaces define the required getters and setters for working with the models with the following restrictions:

- Models do not provide accessors for retrieving other models_. This makes the implementation easier (you can get these via the store; see below) and more akin to a lightweight ORM.

- Model definitions never reference any store-related packages (they can of course import and use juju-native types such as the ones in the

corepackage).

You might be asking: why interfaces and not POGOS (plain old Go structs)? The answer is that each store implementation may want to keep track of store-specific auxiliary data in each model instance. For instance, our mongo implementation associates a transaction revision number (TxnRevno) to each document and uses it to implement client-side transactions. Using interfaces allows us to encapsulate this store-specific information and prevent it from leaking.

Preconditions

Preconditions describe a set of conditions that must be met in order for a list of model mutations to be applied. Juju already has this concept but our existing implementation conflates business logic conditions (entity X must be alive, machine Y should have an address in space Z etc.) with low-level conditions (the recorded transaction revision should be W, document D must NOT exist etc.).

By this proposal, the business logic-related conditions will be moved to their own dedicated package and would not contain any implementation details. Instead, they would simply define a precondition type (e.g. MachineAlive) and record metadata as to what entity they apply to.

It will be the task of the store implementation to map these preconditions into low-level db-specific primitives.

Operations

Assuming that the appropriate store implementation is injected into the facade code, an API method could perform its ACL checks, then lookup a model it needs to work with, mutate its fields and persist it back to the store.

However, if the operation is more complex (e.g. it involves special checks, mutates multiple models etc.), it becomes part of our business logic and should be implemented (and tested!) outside the facade code.

To this end, the proposal introduces the concept of an Operation. Operations live in their own dedicated package, have access to a store instance (they most probably need to look up models) and provide a specialized API for setting up the operation (see next sections for an example).

The key concept here is that Operations expose a method (Changes) that yields a list of Models, Preconditions and/or other Operations (to be recursively expanded).

The operations package defines an interface for each operation as well as a concrete implementation and a constructor for each operation type.

Model transactions

As mentioned above, given juju’s event-driven nature, the business logic is essentially mutating one or more models and persisting them to the backing store. Instead of using the mongo transaction mechanism (i.e. generate txn.Op entries in the business logic code), a new concept will be introduced as a replacement, Model Transactions.

A model transaction is a list of changes to be applied atomically. It consists of:

- Models

- Preconditions

- Operations (to be recursively expanded)

The store implementation is responsible for mapping each item in a model transaction into a list of db-specific operations and ensuring that they are all implemented atomically.

In Go, we can define an interface that must be implemented by items that can be added to a transaction and then provide helper types that can be embedded into our concrete implementations to make it easy to tag things that can be part of a transaction (and have the Go compiler enforce this for us at compile-time). For more details see the last section.

The store interface and implementation

The model store is defined as an interface consisting of several FindXByY kind of methods that yield model instances as well as an ApplyTxn method which is the API exposed for applying model transactions.

The store defines its own db-specific models which implement the appropriate interfaces from the model package and contains logic to map preconditions to low-level DB operations. Additionally, the store is responsible for (recursively) expanding Operations and for raising errors if it encounters an transaction element that it cannot handle.

As you probably expect, the appropriate store instance is injected into our facade code and used for implementing our APIs.

How does this affect our testing patterns?

Given the interface-driven approach taken by this proposal, we can finally introduce real unit testing in each one of our layers:

- At the store layer we can run all tests to ensure data is properly read from/ written to the database. Moreover, we can introduce guard tests that utilize reflection to ensure that each data store implementation supports mapping each one of the defined preconditions.

- At the operation (business logic) layer, we can mock both the model store and any obtained models and focus our tests exclusively to the business logic internals (i.e. with params X,Y,Z we get this set of changes out).

- At the facade layer, we can mock the store, the operations and the required models thus ensuring we test the ACL logic and the correct construction of the required model operations.

- End-to-end tests and bash-based integration tests can test the system end-to-end

This approach would most likely increase our test coverage as we will be able to focus on the business logic (and edge cases) only without having to set up elaborate test suites to get our test fixtures in a particular state. What’s more, as only the store layer tests would interact with a real DB instance, this approach would have a significant impact in the time it takes to run our test suites (both locally and on CI).

TLDR; just show me the code!

To kick-start a discussion around this proposal, I have created a branch that applies the concepts from this proposal to a small self-contained example based on the recent refactoring work for manipulating the open port ranges on a machine.

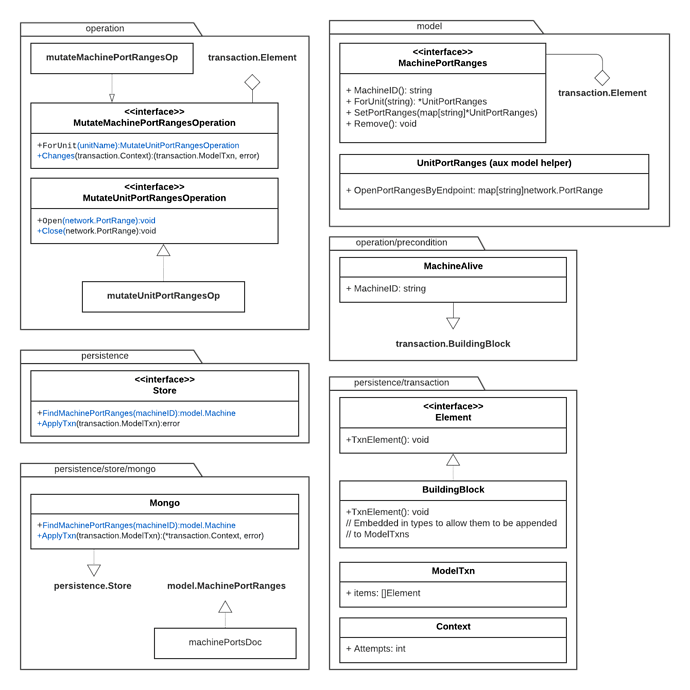

And since everyone loves UML, here is how the example code is structured:

You can find the code here. I would recommend examining the example.go file which simulates an API facade and see how everything fits together. Note that this is just a quick&dirty (but fully functional) example that I put together. It can obviously be refined into something better if we want to pursue something like this in the future.

Looking forward to your comments!